Vercel AI SDK

The Vercel AI SDK gives us a unified surface for calling modern language models, while its UI library (AI SDK UI) offers high-level hooks—useChat, useCompletion, and useObject—that manage inputs, streamed updates, and errors for you.

To plug llm-agent into that UI layer, we need to speak the SDK’s Data Stream Protocol over Server-Sent Events (SSE).
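To make the transport concrete, here is a minimal sketch of how one protocol chunk becomes one SSE event. The frame layout (a `data:` line carrying JSON, a blank line, and a final `[DONE]` sentinel) follows what the reference adapter in this guide writes; the names `StreamChunk`, `encodeSSEChunk`, and `SSE_DONE` are hypothetical and exist only for illustration.

```typescript
// Hypothetical names for illustration; only the frame layout comes from the
// reference adapter shown later in this guide.
type StreamChunk = { type: string; [key: string]: unknown };

// Each protocol chunk is sent as one SSE event: a `data:` line holding the
// JSON-encoded chunk, followed by a blank line.
function encodeSSEChunk(chunk: StreamChunk): string {
  return `data: ${JSON.stringify(chunk)}\n\n`;
}

// The reference adapter terminates the stream with a literal [DONE] sentinel.
const SSE_DONE = "data: [DONE]\n\n";

// A complete (empty) response body: start, finish, then the sentinel.
const frames = [
  encodeSSEChunk({ type: "start", messageId: "msg_1" }),
  encodeSSEChunk({ type: "finish" }),
  SSE_DONE,
].join("");
```

Because `JSON.stringify` escapes newlines inside strings, each chunk stays on a single `data:` line, which keeps the framing unambiguous.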

The adapter shown here lives in the examples directory today. It is not bundled with the core packages yet.

The code in this guide lets you connect AI SDK UI to any llm-sdk/llm-agent backend, including ones written in Go and Rust, not just Node.js.

Example adapter


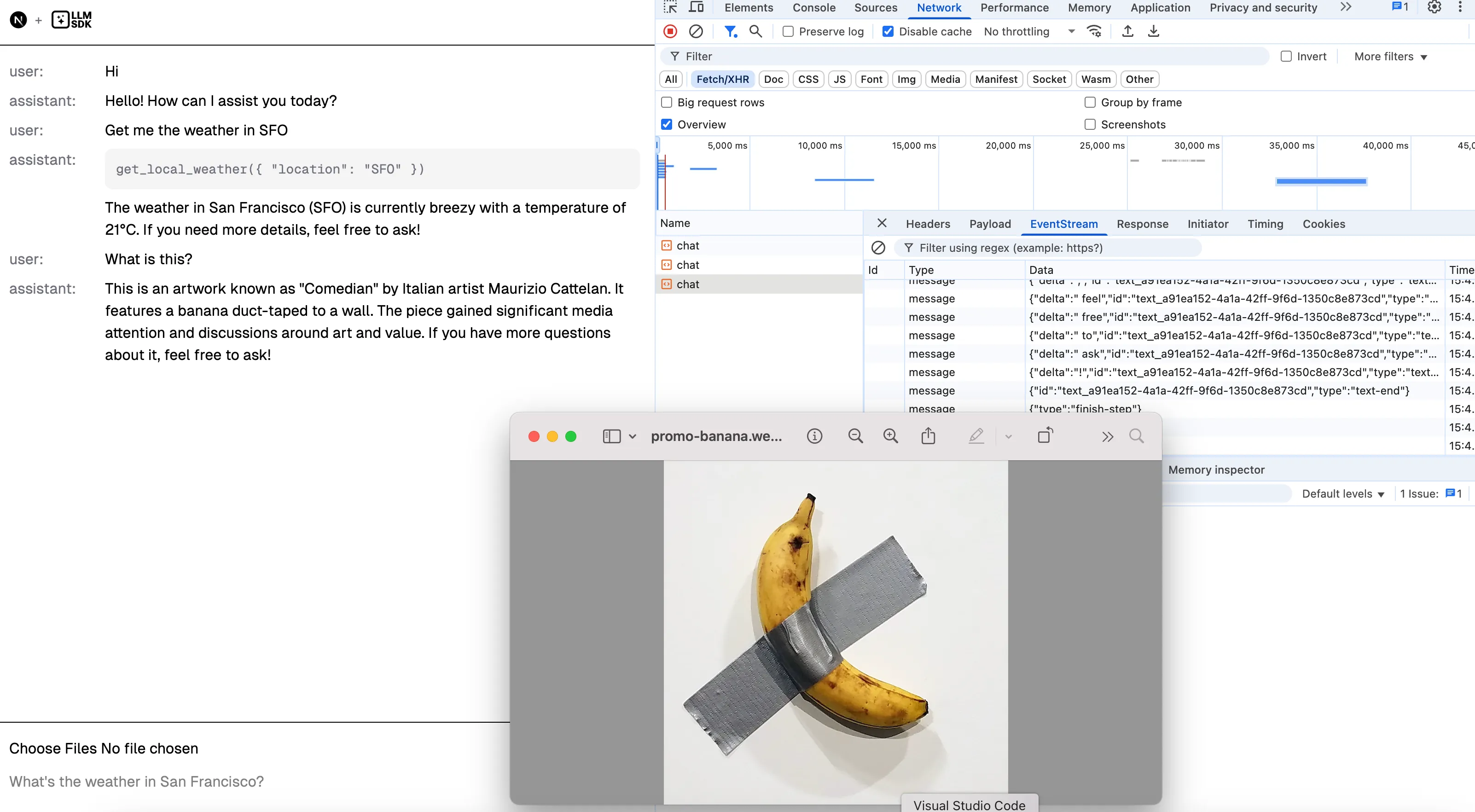

Each agent package ships an examples/ai-sdk-ui project that does two jobs:

- Rehydrate the AI SDK UI request history with its UIMessage into llm-agent AgentItems.
- Translate AgentStreamEvents into the protocol’s start, *-delta, *-end, tool-*, and finish messages, writing them to an SSE response with the x-vercel-ai-ui-message-stream: v1 header.
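As a rough illustration of the second job, the sketch below lists the chunks a plain text reply produces, in the order the reference adapter emits them. The `Chunk` type is a trimmed-down subset of the protocol types shown later, and `chunksForTextReply` is a hypothetical helper, not part of any package.

```typescript
// Trimmed-down subset of the stream protocol chunk types, for illustration.
type Chunk =
  | { type: "start"; messageId: string }
  | { type: "start-step" }
  | { type: "text-start"; id: string }
  | { type: "text-delta"; id: string; delta: string }
  | { type: "text-end"; id: string }
  | { type: "finish-step" }
  | { type: "finish" };

// Hypothetical helper: builds the chunk sequence for a reply that consists of
// a single streamed text part and no tool calls.
function chunksForTextReply(
  messageId: string,
  textId: string,
  deltas: string[],
): Chunk[] {
  return [
    { type: "start", messageId },
    { type: "start-step" },
    { type: "text-start", id: textId },
    ...deltas.map((delta): Chunk => ({ type: "text-delta", id: textId, delta })),
    { type: "text-end", id: textId },
    { type: "finish-step" },
    { type: "finish" },
  ];
}

const exampleChunks = chunksForTextReply("msg_1", "text_1", ["Hel", "lo"]);
```

Tool calls follow the same bracketing idea, with tool-input-start, tool-input-delta, tool-input-available, and tool-output-available chunks emitted inside a step.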

Below is the full reference implementation in every runtime. Choose the one that matches your stack and adapt it to your server framework.

The repository also includes a Next.js frontend that uses the AI SDK UI hooks to connect to any of these backends for testing.
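When the frontend is not at hand, a backend's output can also be checked by decoding the SSE body directly. The following is a small sketch under two assumptions: the whole response body has already been buffered into a string, and every event uses a single `data:` line, as the reference adapter does. `parseSSEBody` is a hypothetical helper name.

```typescript
// Hypothetical test helper: splits a fully buffered SSE body into parsed
// protocol chunks and reports whether the [DONE] sentinel was seen.
function parseSSEBody(body: string): { chunks: unknown[]; done: boolean } {
  const chunks: unknown[] = [];
  let done = false;

  // Events are separated by a blank line.
  for (const frame of body.split("\n\n")) {
    const line = frame.trim();
    if (!line.startsWith("data:")) {
      continue; // Skip empty trailing segments and non-data lines.
    }
    const payload = line.slice("data:".length).trim();
    if (payload === "[DONE]") {
      done = true;
      break;
    }
    chunks.push(JSON.parse(payload));
  }

  return { chunks, done };
}

const decoded = parseSSEBody(
  'data: {"type":"start","messageId":"msg_1"}\n\ndata: {"type":"finish"}\n\ndata: [DONE]\n\n',
);
```

A real client would decode the stream incrementally instead of buffering it; this version only aims to make the wire format easy to inspect.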

import {
  Agent,
  type AgentItem,
  type AgentItemTool,
  type AgentStreamEvent,
} from "@hoangvvo/llm-agent";
import { zodTool } from "@hoangvvo/llm-agent/zod";
import {
  mapMimeTypeToAudioFormat,
  type ContentDelta,
  type LanguageModelMetadata,
  type Message,
  type Part,
  type ReasoningPartDelta,
  type TextPart,
  type TextPartDelta,
  type ToolCallPartDelta,
} from "@hoangvvo/llm-sdk";
import { randomUUID } from "node:crypto";
import http from "node:http";
import z from "zod";
import { getModel } from "./get-model.ts";

// ==== Vercel AI SDK types ====

type UIMessageRole = "system" | "user" | "assistant";

type ProviderMetadata = unknown;

type TextUIPart = { type: "text"; text: string; state?: "streaming" | "done"; providerMetadata?: ProviderMetadata;};

type ReasoningUIPart = { type: "reasoning"; text: string; state?: "streaming" | "done"; providerMetadata?: ProviderMetadata;};

type StepStartUIPart = { type: "step-start";};

type SourceUrlUIPart = { type: "source-url"; sourceId: string; url: string; title?: string; providerMetadata?: ProviderMetadata;};

type SourceDocumentUIPart = { type: "source-document"; sourceId: string; mediaType: string; title: string; filename?: string; providerMetadata?: ProviderMetadata;};

type FileUIPart = { type: "file"; url: string; mediaType: string; filename?: string; providerMetadata?: ProviderMetadata;};

type DataUIPart = { type: `data-${string}`; id?: string; data: unknown; transient?: boolean;};

type ToolInvocationInputStreaming = { toolCallId: string; state: "input-streaming"; input?: unknown; providerExecuted?: boolean;};

type ToolInvocationInputAvailable = { toolCallId: string; state: "input-available"; input: unknown; providerExecuted?: boolean; callProviderMetadata?: ProviderMetadata;};

type ToolInvocationOutputAvailable = { toolCallId: string; state: "output-available"; input: unknown; output: unknown; providerExecuted?: boolean; callProviderMetadata?: ProviderMetadata; preliminary?: boolean;};

type ToolInvocationOutputError = { toolCallId: string; state: "output-error"; input?: unknown; rawInput?: unknown; errorText: string; providerExecuted?: boolean; callProviderMetadata?: ProviderMetadata;};

type UIToolInvocation = | ToolInvocationInputStreaming | ToolInvocationInputAvailable | ToolInvocationOutputAvailable | ToolInvocationOutputError;

type ToolUIPart = { type: `tool-${string}` } & UIToolInvocation;

type DynamicToolUIPart = | ({ type: "dynamic-tool"; toolName: string } & ToolInvocationInputStreaming) | ({ type: "dynamic-tool"; toolName: string } & ToolInvocationInputAvailable) | ({ type: "dynamic-tool"; toolName: string } & ToolInvocationOutputAvailable) | ({ type: "dynamic-tool"; toolName: string } & ToolInvocationOutputError);

type UIMessagePart = | TextUIPart | ReasoningUIPart | ToolUIPart | DynamicToolUIPart | SourceUrlUIPart | SourceDocumentUIPart | FileUIPart | DataUIPart | StepStartUIPart;

type UIMessage = { id: string; role: UIMessageRole; parts: UIMessagePart[]; metadata?: unknown;};

// ==== Vercel AI SDK stream protocol types ====

type TextStartMessageChunk = { type: "text-start"; id: string; providerMetadata?: ProviderMetadata;};

type TextDeltaMessageChunk = { type: "text-delta"; id: string; delta: string; providerMetadata?: ProviderMetadata;};

type TextEndMessageChunk = { type: "text-end"; id: string; providerMetadata?: ProviderMetadata;};

type ReasoningStartMessageChunk = { type: "reasoning-start"; id: string; providerMetadata?: ProviderMetadata;};

type ReasoningDeltaMessageChunk = { type: "reasoning-delta"; id: string; delta: string; providerMetadata?: ProviderMetadata;};

type ReasoningEndMessageChunk = { type: "reasoning-end"; id: string; providerMetadata?: ProviderMetadata;};

type ErrorMessageChunk = { type: "error"; errorText: string;};

type ToolInputStartMessageChunk = { type: "tool-input-start"; toolCallId: string; toolName: string; providerExecuted?: boolean; dynamic?: boolean;};

type ToolInputDeltaMessageChunk = { type: "tool-input-delta"; toolCallId: string; inputTextDelta: string;};

type ToolInputAvailableMessageChunk = { type: "tool-input-available"; toolCallId: string; toolName: string; input: unknown; providerExecuted?: boolean; providerMetadata?: ProviderMetadata; dynamic?: boolean;};

type ToolInputErrorMessageChunk = { type: "tool-input-error"; toolCallId: string; toolName: string; input: unknown; errorText: string; providerExecuted?: boolean; providerMetadata?: ProviderMetadata; dynamic?: boolean;};

type ToolOutputAvailableMessageChunk = { type: "tool-output-available"; toolCallId: string; output: unknown; providerExecuted?: boolean; dynamic?: boolean; preliminary?: boolean;};

type ToolOutputErrorMessageChunk = { type: "tool-output-error"; toolCallId: string; errorText: string; providerExecuted?: boolean; dynamic?: boolean;};

type SourceUrlMessageChunk = { type: "source-url"; sourceId: string; url: string; title?: string; providerMetadata?: ProviderMetadata;};

type SourceDocumentMessageChunk = { type: "source-document"; sourceId: string; mediaType: string; title: string; filename?: string; providerMetadata?: ProviderMetadata;};

type FileMessageChunk = { type: "file"; url: string; mediaType: string; providerMetadata?: ProviderMetadata;};

type DataUIMessageChunk = { type: `data-${string}`; id?: string; data: unknown; transient?: boolean;};

type StepStartMessageChunk = { type: "start-step";};

type StepFinishMessageChunk = { type: "finish-step";};

type StartMessageChunk = { type: "start"; messageId?: string; messageMetadata?: unknown;};

type FinishMessageChunk = { type: "finish"; messageMetadata?: unknown;};

type AbortMessageChunk = { type: "abort";};

type MessageMetadataMessageChunk = { type: "message-metadata"; messageMetadata: unknown;};

type UIMessageChunk = | TextStartMessageChunk | TextDeltaMessageChunk | TextEndMessageChunk | ReasoningStartMessageChunk | ReasoningDeltaMessageChunk | ReasoningEndMessageChunk | ErrorMessageChunk | ToolInputStartMessageChunk | ToolInputDeltaMessageChunk | ToolInputAvailableMessageChunk | ToolInputErrorMessageChunk | ToolOutputAvailableMessageChunk | ToolOutputErrorMessageChunk | SourceUrlMessageChunk | SourceDocumentMessageChunk | FileMessageChunk | DataUIMessageChunk | StepStartMessageChunk | StepFinishMessageChunk | StartMessageChunk | FinishMessageChunk | AbortMessageChunk | MessageMetadataMessageChunk;

type MessageStreamEvent = UIMessageChunk;

type ChatTrigger = "submit-message" | "regenerate-message";

interface ChatRequestBody { id?: string; trigger?: ChatTrigger; messageId?: string; messages?: UIMessage[]; provider?: string; modelId?: string; metadata?: LanguageModelMetadata; [key: string]: unknown;}

// ==== Agent setup ====

type ChatContext = Record<string, never>;

const timeTool = zodTool({ name: "get_current_time", description: "Get the current server time in ISO 8601 format.", parameters: z.object({}), execute() { return { content: [ { type: "text", text: new Date().toISOString(), } satisfies TextPart, ], is_error: false, }; },});

const weatherTool = zodTool({ name: "get_local_weather", description: "Return a lightweight weather forecast for a given city using mock data.", parameters: z.object({ location: z.string().describe("City name to look up weather for."), }), execute({ location }) { const conditions = ["sunny", "cloudy", "rainy", "breezy"]; const condition = conditions[location.length % conditions.length]; return { content: [ { type: "text", text: JSON.stringify({ location, condition, temperatureC: 18 + (location.length % 10), }), } satisfies TextPart, ], is_error: false, }; },});

/** * Builds an `Agent` that mirrors the model/provider configuration requested by * the UI. The returned agent streams `AgentStreamEvent`s that we later adapt * into Vercel's data stream protocol. */function createAgent( provider: string, modelId: string, metadata?: LanguageModelMetadata,) { const model = getModel(provider, modelId, metadata);

return new Agent<ChatContext>({ name: "UIExampleAgent", model, instructions: [ "You are an assistant orchestrated by the llm-agent SDK.", "Use the available tools when they can provide better answers.", ], tools: [timeTool, weatherTool], });}

// ==== Streaming helpers ====

interface TextStreamState { id: string;}

interface ReasoningStreamState { id: string;}

interface ToolCallStreamState { toolCallId: string; toolName: string; argsBuffer: string; didEmitStart: boolean;}

interface SSEWriter { write: (event: MessageStreamEvent) => void; close: () => void;}

/** * Wraps the raw `ServerResponse` with helpers for emitting Server-Sent Events. * Vercel's AI SDK data stream protocol uses SSE under the hood, so having a * dedicated writer keeps the transport concerns isolated from the adapter * logic that follows. */function createSSEWriter(res: http.ServerResponse): SSEWriter { return { write(event: MessageStreamEvent) { res.write(`data: ${JSON.stringify(event)}\n\n`); }, close() { res.write("data: [DONE]\n\n"); res.end(); }, };}

/** * Attempts to parse a JSON payload coming from streaming tool arguments or * tool results. The protocol expects structured data, but tool authors can * return arbitrary strings. If parsing fails we fall back to the raw string so * the UI can still render something meaningful. */function safeJsonParse(rawText: string): unknown { try { return JSON.parse(rawText); } catch { return rawText; }}

/** * Bridges `AgentStreamEvent`s to the Vercel AI SDK data stream protocol. * * - If you use this class with `llm-agent`, pass all events emitted by * `Agent.runStream` to the `write` method. * - If you use this class with `llm-sdk` directly, pass all events emitted by * `LanguageModel.stream` to the `writeDelta` method. */class DataStreamProtocolAdapter { readonly #writer: SSEWriter; readonly #textStateMap = new Map<number, TextStreamState>(); readonly #reasoningStateMap = new Map<number, ReasoningStreamState>(); readonly #toolCallStateMap = new Map<number, ToolCallStreamState>(); #stepHasStarted = false; #closed = false;

constructor(res: http.ServerResponse) { this.#writer = createSSEWriter(res); const messageId = `msg_${randomUUID()}`; this.#writer.write({ type: "start", messageId }); }

/** * Consumes one `AgentStreamEvent` emitted by `Agent.runStream`. Each event is * translated into the corresponding data stream chunks expected by the AI * SDK frontend. */ write(event: AgentStreamEvent): void { if (this.#closed) { return; }

    switch (event.event) {
      case "partial":
        this.#ensureStepStarted();
        if (event.delta) {
          this.writeDelta(event.delta);
        }
        break;
      case "item":
        this.#finishStep();
        if (event.item.type === "tool") {
          this.#ensureStepStarted();
          this.#writeForToolItem(event.item);
          this.#finishStep();
        }
        break;
      case "response":
        // The final agent response does not translate to an extra stream part.
        break;
    }
  }

/** * Emits an error chunk so the frontend can surface failures alongside the * running message instead of silently terminating the stream. */ emitError(errorText: string): void { if (this.#closed) { return; } this.#writer.write({ type: "error", errorText }); }

/** * Flushes any open stream parts, emits the terminating `finish` message, and * ends the SSE connection. */ close(): void { if (this.#closed) { return; } this.#finishStep(); this.#writer.write({ type: "finish" }); this.#writer.close(); this.#closed = true; }

#ensureStepStarted(): void { if (this.#stepHasStarted) { return; } this.#stepHasStarted = true; this.#writer.write({ type: "start-step" }); }

#finishStep(): void { if (!this.#stepHasStarted) { return; } this.#flushStates(); this.#stepHasStarted = false; this.#writer.write({ type: "finish-step" }); }

#flushStates(): void { for (const [index, textState] of this.#textStateMap) { this.#writer.write({ type: "text-end", id: textState.id }); this.#textStateMap.delete(index); }

for (const [index, reasoningState] of this.#reasoningStateMap) { this.#writer.write({ type: "reasoning-end", id: reasoningState.id }); this.#reasoningStateMap.delete(index); }

for (const [index, toolCallState] of this.#toolCallStateMap) { const { toolCallId, toolName, argsBuffer } = toolCallState; if (toolCallId && toolName && argsBuffer.length > 0) { this.#writer.write({ type: "tool-input-available", toolCallId, toolName, input: safeJsonParse(argsBuffer), }); } this.#toolCallStateMap.delete(index); } }

/** * Consumes one `ContentDelta` emitted by `LanguageModel.stream`. Each delta is * translated into the corresponding data stream chunks expected by the AI * SDK frontend. */ writeDelta(contentDelta: ContentDelta): void { switch (contentDelta.part.type) { case "text": this.#writeForTextPartDelta(contentDelta.index, contentDelta.part); break; case "reasoning": this.#writeForReasoningPartDelta(contentDelta.index, contentDelta.part); break; case "audio": this.#flushStates(); break; case "image": this.#flushStates(); break; case "tool-call": this.#writeForToolCallPartDelta(contentDelta.index, contentDelta.part); break; } }

#writeForTextPartDelta(index: number, part: TextPartDelta): void { let existingTextState = this.#textStateMap.get(index); if (!existingTextState) { this.#flushStates(); existingTextState = { id: `text_${randomUUID()}` }; this.#textStateMap.set(index, existingTextState); this.#writer.write({ type: "text-start", id: existingTextState.id }); }

this.#writer.write({ type: "text-delta", id: existingTextState.id, delta: part.text, }); }

#writeForReasoningPartDelta(index: number, part: ReasoningPartDelta): void { let existingReasoningState = this.#reasoningStateMap.get(index); if (!existingReasoningState) { this.#flushStates(); existingReasoningState = { id: `reasoning_${part.id ?? randomUUID()}` }; this.#reasoningStateMap.set(index, existingReasoningState); this.#writer.write({ type: "reasoning-start", id: existingReasoningState.id, }); }

this.#writer.write({ type: "reasoning-delta", id: existingReasoningState.id, delta: part.text, }); }

#writeForToolCallPartDelta(index: number, part: ToolCallPartDelta): void { let existingToolCallState = this.#toolCallStateMap.get(index); if (!existingToolCallState) { this.#flushStates(); existingToolCallState = { toolCallId: part.tool_call_id ?? "", toolName: part.tool_name ?? "", argsBuffer: "", didEmitStart: false, }; this.#toolCallStateMap.set(index, existingToolCallState); }

existingToolCallState.toolCallId = part.tool_call_id ?? existingToolCallState.toolCallId; existingToolCallState.toolName = part.tool_name ?? existingToolCallState.toolName;

if ( !existingToolCallState.didEmitStart && existingToolCallState.toolCallId.length > 0 && existingToolCallState.toolName.length > 0 ) { existingToolCallState.didEmitStart = true; this.#writer.write({ type: "tool-input-start", toolCallId: existingToolCallState.toolCallId, toolName: existingToolCallState.toolName, }); }

if (part.args) { existingToolCallState.argsBuffer += part.args; this.#writer.write({ type: "tool-input-delta", toolCallId: existingToolCallState.toolCallId, inputTextDelta: part.args, }); } }

#writeForToolItem(item: AgentItemTool): void { this.#flushStates(); const textParts = item.output .filter((part): part is TextPart => part.type === "text") .map((part) => part.text) .join("");

const hasTextOutput = textParts.length > 0; const parsedOutput = hasTextOutput ? safeJsonParse(textParts) : item.output;

this.#writer.write({ type: "tool-output-available", toolCallId: item.tool_call_id, output: parsedOutput, }); }}

// ==== Adapter layers ====

/**
 * Converts UI message parts produced by the Vercel AI SDK components back into
 * the core `Part` representation understood by `@hoangvvo/llm-sdk`. This lets
 * us replay prior chat history into the agent while preserving tool calls and
 * intermediate reasoning steps.
 */
function uiMessagePartToPart(part: UIMessagePart): Part[] {
  if (part.type === "text") {
    return [
      {
        type: "text",
        text: part.text,
      },
    ];
  }
  if (part.type === "reasoning") {
    return [
      {
        type: "reasoning",
        text: part.text,
      },
    ];
  }
  if (part.type === "dynamic-tool") {
    return [
      {
        type: "tool-call",
        args: part.input as Record<string, unknown>,
        tool_call_id: part.toolCallId,
        tool_name: part.toolName,
      },
    ];
  }
  if (part.type === "file") {
    // part.url has the form "data:<mediaType>;base64,<data>".
    // We are only interested in the raw base64 data for our representation.
    let data: string;
    const sepIndex = part.url.indexOf(",");
    if (sepIndex !== -1) {
      data = part.url.slice(sepIndex + 1);
    } else {
      data = part.url;
    }
    if (part.mediaType.startsWith("image/")) {
      return [
        {
          type: "image",
          data: data,
          mime_type: part.mediaType,
        },
      ];
    }
    if (part.mediaType.startsWith("audio/")) {
      return [
        {
          type: "audio",
          data: data,
          format: mapMimeTypeToAudioFormat(part.mediaType),
        },
      ];
    }
    if (part.mediaType.startsWith("text/")) {
      return [
        {
          type: "text",
          text: Buffer.from(data, "base64").toString("utf-8"),
        },
      ];
    }
    // Unsupported file type
    return [];
  }
  if (part.type.startsWith("tool-")) {
    const toolUIPart = part as ToolUIPart;
    const toolName = part.type.slice("tool-".length);
    switch (toolUIPart.state) {
      case "input-available":
        return [
          {
            type: "tool-call",
            args: toolUIPart.input as Record<string, unknown>,
            tool_call_id: toolUIPart.toolCallId,
            tool_name: toolName,
          },
        ];
      case "output-available":
        return [
          {
            type: "tool-call",
            args: toolUIPart.input as Record<string, unknown>,
            tool_call_id: toolUIPart.toolCallId,
            tool_name: toolName,
          },
          {
            type: "tool-result",
            content: [
              {
                type: "text",
                text: JSON.stringify(toolUIPart.output),
              },
            ],
            tool_call_id: toolUIPart.toolCallId,
            tool_name: toolName,
          },
        ];
      case "output-error":
        return [
          {
            type: "tool-call",
            args: toolUIPart.input as Record<string, unknown>,
            tool_call_id: toolUIPart.toolCallId,
            tool_name: toolName,
          },
          {
            type: "tool-result",
            content: [
              {
                type: "text",
                text: toolUIPart.errorText,
              },
            ],
            tool_call_id: toolUIPart.toolCallId,
            tool_name: toolName,
            is_error: true,
          },
        ];
    }
  }
  return [];
}

/**
 * Flattens the UI message history into a `Message[]` so it can be passed to
 * `Agent.runStream`. The agent expects user, assistant, and tool messages as
 * separate timeline entries; this helper enforces the ordering invariants while
 * translating the UI-specific tool call format.
 */
function uiMessagesToMessages(messages: UIMessage[]): Message[] {
  const items: Message[] = [];

  // We will work with all AgentItemMessage of role "user", "assistant", and "tool"
  // There can only be two possible sequences:
  // - user -> assistant -> user -> assistant ...
  // - user -> assistant -> tool -> assistant -> tool -> assistant ...

  for (const message of messages) {
    switch (message.role) {
      case "user": {
        const parts = message.parts.flatMap(uiMessagePartToPart);
        if (parts.length > 0) {
          items.push({
            role: "user",
            content: parts,
          });
        }
        break;
      }
      case "assistant": {
        // The assistant case is a bit tricky because the message may contain
        // tool calls and tool results. UIMessage does not have a specialized
        // role for tool results.
        const parts = message.parts.flatMap(uiMessagePartToPart);
        for (const part of parts) {
          switch (part.type) {
            // Handle assistant final output parts
            case "text":
            case "reasoning":
            case "audio":
            case "image": {
              // If the last item is an assistant message, append to it
              const lastItem = items[items.length - 1];
              const secondLastItem = items[items.length - 2];
              if (lastItem?.role === "assistant") {
                lastItem.content.push(part);
              } else if (
                lastItem?.role === "tool" &&
                secondLastItem?.role === "assistant"
              ) {
                secondLastItem.content.push(part);
              } else {
                items.push({
                  role: "assistant",
                  content: [part],
                });
              }
              break;
            }
            case "tool-call": {
              const lastItem = items[items.length - 1];
              const secondLastItem = items[items.length - 2];
              if (lastItem?.role === "assistant") {
                lastItem.content.push(part);
              } else if (
                lastItem?.role === "tool" &&
                secondLastItem?.role === "assistant"
              ) {
                secondLastItem.content.push(part);
              } else {
                items.push({
                  role: "assistant",
                  content: [part],
                });
              }
              break;
            }
            case "tool-result": {
              const lastItem = items[items.length - 1];
              if (lastItem?.role === "tool") {
                lastItem.content.push(part);
              } else {
                items.push({
                  role: "tool",
                  content: [part],
                });
              }
            }
          }
        }
        break;
      }
    }
  }

  return items;
}

// ==== HTTP handlers ====

/** * HTTP handler for the `/api/chat` endpoint. It validates the incoming * `ChatRequestBody`, rehydrates the chat history, and streams the agent run * back to the frontend using `DataStreamProtocolAdapter` so the response follows * the Vercel AI SDK data streaming protocol. */async function handleChatRequest( req: http.IncomingMessage, res: http.ServerResponse,) { try { const bodyText = await readRequestBody(req);

let body: ChatRequestBody; try { body = JSON.parse(bodyText) as ChatRequestBody; } catch (err) { res.writeHead(400, { "Content-Type": "application/json" }); res.end( JSON.stringify({ error: err instanceof Error ? err.message : "Invalid request body", }), ); return; }

const provider = typeof body.provider === "string" ? body.provider : "openai"; const modelId = typeof body.modelId === "string" ? body.modelId : "gpt-4o-mini"; const agent = createAgent(provider, modelId, body.metadata);

const uiHistory = Array.isArray(body.messages) ? body.messages : []; const historyMessages = uiMessagesToMessages(uiHistory); const items: AgentItem[] = historyMessages.map((message) => ({ type: "message", ...message, }));

res.writeHead(200, { "Content-Type": "text/event-stream", "Cache-Control": "no-cache, no-transform", Connection: "keep-alive", "x-vercel-ai-ui-message-stream": "v1", "Access-Control-Allow-Origin": "*", });

res.flushHeaders?.();

const adapter = new DataStreamProtocolAdapter(res);

let clientClosed = false; req.on("close", () => { clientClosed = true; });

const stream = agent.runStream({ context: {}, input: items, });

try { for await (const event of stream) { if (clientClosed) { break; } adapter.write(event); } } catch (err) { console.error(err); adapter.emitError(err instanceof Error ? err.message : "Unknown error"); }

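    // Stop the agent run if the client disconnected. The generator rethrows
    // the injected error, so it is swallowed here; otherwise it would reach the
    // outer catch, which writes a 500 response after headers were already sent.
    if (clientClosed && typeof stream.throw === "function") {
      try {
        await stream.throw(new Error("Client closed connection"));
      } catch {
        // Expected: the injected error propagates back out of the generator.
      }
    }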

if (!clientClosed) { adapter.close(); } } catch (err) { console.error(err); res.writeHead(500, { "Content-Type": "application/json" }); res.end( JSON.stringify({ error: err instanceof Error ? err.message : "Unknown error", }), ); }}

/** * Accumulates the raw request payload so we can decode the JSON `ChatRequestBody`. */function readRequestBody(req: http.IncomingMessage): Promise<string> { return new Promise((resolve, reject) => { let data = ""; req.on("data", (chunk) => { data += String(chunk); }); req.on("end", () => resolve(data)); req.on("error", reject); });}

const port = 8000;

http .createServer((req, res) => { if (req.method === "OPTIONS") { res.writeHead(204, { "Access-Control-Allow-Origin": "*", "Access-Control-Allow-Headers": "content-type", }); res.end(); return; }

if (req.method === "POST" && req.url === "/api/chat") { void handleChatRequest(req, res); return; }

    res.writeHead(404, { "Content-Type": "application/json" });
    res.end(JSON.stringify({ error: "Not found" }));
  })
  .listen(port, () => {
    console.log(
      `AI SDK UI example server listening on http://localhost:${port}`,
    );
  });

package main

import (
	"context"
	"encoding/base64"
	"encoding/json"
	"errors"
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
	"sync"
	"time"

	"github.com/google/uuid"
	llmagent "github.com/hoangvvo/llm-sdk/agent-go"
	"github.com/hoangvvo/llm-sdk/agent-go/examples"
	llmsdk "github.com/hoangvvo/llm-sdk/sdk-go"
	"github.com/hoangvvo/llm-sdk/sdk-go/utils/partutil"
)

// ==== Vercel AI SDK types ====

type uiMessageRole string

const ( uiRoleSystem uiMessageRole = "system" uiRoleUser uiMessageRole = "user" uiRoleAssistant uiMessageRole = "assistant")

type providerMetadata = any

type uiMessage struct { ID string `json:"id"` Role uiMessageRole `json:"role"` Parts []uiPart `json:"parts"` Metadata any `json:"metadata,omitempty"`}

type uiPart struct { Text *textUIPart Reasoning *reasoningUIPart DynamicTool *dynamicToolUIPart Tool *toolUIPart File *fileUIPart}

func (p *uiPart) UnmarshalJSON(data []byte) error { var base baseUIPart if err := json.Unmarshal(data, &base); err != nil { return err } switch { case base.Type == "text": var part textUIPart if err := json.Unmarshal(data, &part); err != nil { return err } p.Text = &part case base.Type == "reasoning": var part reasoningUIPart if err := json.Unmarshal(data, &part); err != nil { return err } p.Reasoning = &part case base.Type == "dynamic-tool": var part dynamicToolUIPart if err := json.Unmarshal(data, &part); err != nil { return err } p.DynamicTool = &part case base.Type == "file": var part fileUIPart if err := json.Unmarshal(data, &part); err != nil { return err } p.File = &part default: if strings.HasPrefix(base.Type, "tool-") { var part toolUIPart if err := json.Unmarshal(data, &part); err != nil { return err } part.rawType = base.Type if part.ToolName == "" { part.ToolName = strings.TrimPrefix(base.Type, "tool-") } p.Tool = &part } else { p.Tool = nil } } return nil}

func (p uiPart) MarshalJSON() ([]byte, error) { switch { case p.Text != nil: return json.Marshal(struct { Type string `json:"type"` *textUIPart }{ Type: "text", textUIPart: p.Text, }) case p.Reasoning != nil: return json.Marshal(struct { Type string `json:"type"` *reasoningUIPart }{ Type: "reasoning", reasoningUIPart: p.Reasoning, }) case p.DynamicTool != nil: return json.Marshal(struct { Type string `json:"type"` *dynamicToolUIPart }{ Type: "dynamic-tool", dynamicToolUIPart: p.DynamicTool, }) case p.File != nil: return json.Marshal(struct { Type string `json:"type"` *fileUIPart }{ Type: "file", fileUIPart: p.File, }) case p.Tool != nil: typeValue := p.Tool.rawType if typeValue == "" { if p.Tool.ToolName != "" { typeValue = "tool-" + p.Tool.ToolName } else { typeValue = "tool" } } return json.Marshal(struct { Type string `json:"type"` *toolUIPart }{ Type: typeValue, toolUIPart: p.Tool, }) default: return nil, fmt.Errorf("uiPart marshal: no variant populated") }}

type chatRequestBody struct { ID string `json:"id,omitempty"` Trigger string `json:"trigger,omitempty"` MessageID string `json:"messageId,omitempty"` Messages []uiMessage `json:"messages"` Provider string `json:"provider,omitempty"` ModelID string `json:"modelId,omitempty"` Metadata *llmsdk.LanguageModelMetadata `json:"metadata,omitempty"`}

type baseUIPart struct { Type string `json:"type"`}

type textUIPart struct { Text string `json:"text"` State *string `json:"state,omitempty"` ProviderMetadata providerMetadata `json:"providerMetadata,omitempty"`}

type reasoningUIPart struct { Text string `json:"text"` State *string `json:"state,omitempty"` ProviderMetadata providerMetadata `json:"providerMetadata,omitempty"`}

type fileUIPart struct { URL string `json:"url"` MediaType string `json:"mediaType"` Filename *string `json:"filename,omitempty"` ProviderMetadata providerMetadata `json:"providerMetadata,omitempty"`}

type dynamicToolUIPart struct { ToolName string `json:"toolName"` ToolCallID string `json:"toolCallId"` Input any `json:"input,omitempty"`}

type toolUIPart struct { State string `json:"state"` ToolCallID string `json:"toolCallId"` ToolName string `json:"toolName,omitempty"` Input any `json:"input,omitempty"` Output any `json:"output,omitempty"` ErrorText string `json:"errorText,omitempty"` ProviderMetadata providerMetadata `json:"providerMetadata,omitempty"` rawType string `json:"-"`}

func (p *toolUIPart) resolvedToolName() string { if p.ToolName != "" { return p.ToolName } return strings.TrimPrefix(p.rawType, "tool-")}

// ==== Agent setup ====

type chatContext struct{}

type timeTool struct{}

func (t *timeTool) Name() string { return "get_current_time"}

func (t *timeTool) Description() string { return "Get the current server time in ISO 8601 format."}

func (t *timeTool) Parameters() llmsdk.JSONSchema { return llmsdk.JSONSchema{ "type": "object", "properties": map[string]any{}, "additionalProperties": false, }}

func (t *timeTool) Execute(_ context.Context, _ json.RawMessage, _ chatContext, _ *llmagent.RunState) (llmagent.AgentToolResult, error) { now := time.Now().UTC().Format(time.RFC3339) return llmagent.AgentToolResult{ Content: []llmsdk.Part{llmsdk.NewTextPart(now)}, IsError: false, }, nil}

type weatherTool struct{}

func (t *weatherTool) Name() string { return "get_local_weather"}

func (t *weatherTool) Description() string { return "Return a lightweight weather forecast for a given city using mock data."}

func (t *weatherTool) Parameters() llmsdk.JSONSchema { return llmsdk.JSONSchema{ "type": "object", "properties": map[string]any{ "location": map[string]any{ "type": "string", "description": "City name to look up weather for.", }, }, "required": []string{"location"}, "additionalProperties": false, }}

type weatherParams struct { Location string `json:"location"`}

func (t *weatherTool) Execute(_ context.Context, paramsJSON json.RawMessage, _ chatContext, _ *llmagent.RunState) (llmagent.AgentToolResult, error) {
	var params weatherParams
	if len(paramsJSON) > 0 {
		if err := json.Unmarshal(paramsJSON, &params); err != nil {
			return llmagent.AgentToolResult{}, err
		}
	}

location := strings.TrimSpace(params.Location) conditions := []string{"sunny", "cloudy", "rainy", "breezy"} condition := conditions[len(location)%len(conditions)] result := map[string]any{ "location": location, "condition": condition, "temperatureC": 18 + (len(location) % 10), }

payload, err := json.Marshal(result) if err != nil { return llmagent.AgentToolResult{}, err }

return llmagent.AgentToolResult{ Content: []llmsdk.Part{llmsdk.NewTextPart(string(payload))}, IsError: false, }, nil}

func createAgent(provider, modelID string, metadata llmsdk.LanguageModelMetadata) *llmagent.Agent[chatContext] { model, err := examples.GetModel(provider, modelID, metadata, "") if err != nil { panic(err) }

instruction1 := "You are an assistant orchestrated by the llm-agent SDK." instruction2 := "Use the available tools when they can provide better answers."

return llmagent.NewAgent("UIExampleAgent", model, llmagent.WithInstructions( llmagent.InstructionParam[chatContext]{String: &instruction1}, llmagent.InstructionParam[chatContext]{String: &instruction2}, ), llmagent.WithTools(&timeTool{}, &weatherTool{}), )}

// ==== Streaming helpers ====

type textStreamState struct { id string}

type reasoningStreamState struct { id string}

type toolCallStreamState struct { toolCallID string toolName string argsBuilder strings.Builder didEmitStart bool}

type sseWriter struct { w http.ResponseWriter flusher http.Flusher mu sync.Mutex}

// newSSEWriter wraps the ResponseWriter with helpers for emitting Server-Sent
// Events. The Vercel AI SDK data stream protocol uses SSE, so isolating the
// transport details keeps the adapter focused on payload translation.
func newSSEWriter(w http.ResponseWriter) (*sseWriter, error) {
	flusher, ok := w.(http.Flusher)
	if !ok {
		return nil, errors.New("streaming unsupported by response writer")
	}
	return &sseWriter{w: w, flusher: flusher}, nil
}

func (w *sseWriter) Write(event any) error { payload, err := json.Marshal(event) if err != nil { return err }

w.mu.Lock() defer w.mu.Unlock()

if _, err := w.w.Write([]byte("data: ")); err != nil { return err } if _, err := w.w.Write(payload); err != nil { return err } if _, err := w.w.Write([]byte("\n\n")); err != nil { return err } w.flusher.Flush() return nil}

func (w *sseWriter) Close() error { w.mu.Lock() defer w.mu.Unlock()

if _, err := w.w.Write([]byte("data: [DONE]\n\n")); err != nil { return err } w.flusher.Flush() return nil}

type startChunk struct {
	MessageID string `json:"messageId,omitempty"`
}

func (c startChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type      string `json:"type"`
		MessageID string `json:"messageId,omitempty"`
	}
	return json.Marshal(alias{Type: "start", MessageID: c.MessageID})
}

type startStepChunk struct{}

func (startStepChunk) MarshalJSON() ([]byte, error) {
	return json.Marshal(struct {
		Type string `json:"type"`
	}{Type: "start-step"})
}

type finishStepChunk struct{}

func (finishStepChunk) MarshalJSON() ([]byte, error) {
	return json.Marshal(struct {
		Type string `json:"type"`
	}{Type: "finish-step"})
}

type textStartChunk struct {
	ID string `json:"id"`
}

func (c textStartChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type string `json:"type"`
		ID   string `json:"id"`
	}
	return json.Marshal(alias{Type: "text-start", ID: c.ID})
}

type textDeltaChunk struct {
	ID    string `json:"id"`
	Delta string `json:"delta"`
}

func (c textDeltaChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type  string `json:"type"`
		ID    string `json:"id"`
		Delta string `json:"delta"`
	}
	return json.Marshal(alias{Type: "text-delta", ID: c.ID, Delta: c.Delta})
}

type textEndChunk struct {
	ID string `json:"id"`
}

func (c textEndChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type string `json:"type"`
		ID   string `json:"id"`
	}
	return json.Marshal(alias{Type: "text-end", ID: c.ID})
}

type reasoningStartChunk struct {
	ID string `json:"id"`
}

func (c reasoningStartChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type string `json:"type"`
		ID   string `json:"id"`
	}
	return json.Marshal(alias{Type: "reasoning-start", ID: c.ID})
}

type reasoningDeltaChunk struct {
	ID    string `json:"id"`
	Delta string `json:"delta"`
}

func (c reasoningDeltaChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type  string `json:"type"`
		ID    string `json:"id"`
		Delta string `json:"delta"`
	}
	return json.Marshal(alias{Type: "reasoning-delta", ID: c.ID, Delta: c.Delta})
}

type reasoningEndChunk struct {
	ID string `json:"id"`
}

func (c reasoningEndChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type string `json:"type"`
		ID   string `json:"id"`
	}
	return json.Marshal(alias{Type: "reasoning-end", ID: c.ID})
}

type toolInputStartChunk struct {
	ToolCallID string `json:"toolCallId"`
	ToolName   string `json:"toolName"`
}

func (c toolInputStartChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type       string `json:"type"`
		ToolCallID string `json:"toolCallId"`
		ToolName   string `json:"toolName"`
	}
	return json.Marshal(alias{
		Type:       "tool-input-start",
		ToolCallID: c.ToolCallID,
		ToolName:   c.ToolName,
	})
}

type toolInputDeltaChunk struct {
	ToolCallID     string `json:"toolCallId"`
	InputTextDelta string `json:"inputTextDelta"`
}

func (c toolInputDeltaChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type           string `json:"type"`
		ToolCallID     string `json:"toolCallId"`
		InputTextDelta string `json:"inputTextDelta"`
	}
	return json.Marshal(alias{
		Type:           "tool-input-delta",
		ToolCallID:     c.ToolCallID,
		InputTextDelta: c.InputTextDelta,
	})
}

type toolInputAvailableChunk struct {
	ToolCallID string `json:"toolCallId"`
	ToolName   string `json:"toolName"`
	Input      any    `json:"input"`
}

func (c toolInputAvailableChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type       string `json:"type"`
		ToolCallID string `json:"toolCallId"`
		ToolName   string `json:"toolName"`
		Input      any    `json:"input"`
	}
	return json.Marshal(alias{
		Type:       "tool-input-available",
		ToolCallID: c.ToolCallID,
		ToolName:   c.ToolName,
		Input:      c.Input,
	})
}

type toolOutputAvailableChunk struct {
	ToolCallID string `json:"toolCallId"`
	Output     any    `json:"output"`
}

func (c toolOutputAvailableChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type       string `json:"type"`
		ToolCallID string `json:"toolCallId"`
		Output     any    `json:"output"`
	}
	return json.Marshal(alias{
		Type:       "tool-output-available",
		ToolCallID: c.ToolCallID,
		Output:     c.Output,
	})
}

type errorChunk struct {
	ErrorText string `json:"errorText"`
}

func (c errorChunk) MarshalJSON() ([]byte, error) {
	type alias struct {
		Type      string `json:"type"`
		ErrorText string `json:"errorText"`
	}
	return json.Marshal(alias{Type: "error", ErrorText: c.ErrorText})
}

type finishChunk struct{}

func (finishChunk) MarshalJSON() ([]byte, error) {
	return json.Marshal(struct {
		Type string `json:"type"`
	}{Type: "finish"})
}

// dataStreamProtocolAdapter bridges AgentStreamEvent values to the Vercel AI
// SDK data stream protocol. Feed every event emitted by Agent.RunStream into
// Write so the frontend receives the expected stream chunks.
type dataStreamProtocolAdapter struct {
	writer            *sseWriter
	textStateMap      map[int]textStreamState
	reasoningStateMap map[int]reasoningStreamState
	toolCallStateMap  map[int]*toolCallStreamState
	stepStarted       bool
	closed            bool
}

func newDataStreamProtocolAdapter(w http.ResponseWriter) (*dataStreamProtocolAdapter, error) {
	writer, err := newSSEWriter(w)
	if err != nil {
		return nil, err
	}

	adapter := &dataStreamProtocolAdapter{
		writer:            writer,
		textStateMap:      make(map[int]textStreamState),
		reasoningStateMap: make(map[int]reasoningStreamState),
		toolCallStateMap:  make(map[int]*toolCallStreamState),
	}

	messageID := "msg_" + uuid.NewString()
	if err := adapter.writer.Write(startChunk{MessageID: messageID}); err != nil {
		return nil, err
	}

	return adapter, nil
}

func (a *dataStreamProtocolAdapter) Write(event *llmagent.AgentStreamEvent) error {
	if a.closed {
		return nil
	}

	switch {
	case event.Partial != nil:
		if event.Partial.Delta == nil {
			return nil
		}
		if err := a.ensureStepStarted(); err != nil {
			return err
		}
		return a.writeDelta(event.Partial.Delta)
	case event.Item != nil:
		if err := a.finishStep(); err != nil {
			return err
		}
		if event.Item.Item.Tool != nil {
			if err := a.ensureStepStarted(); err != nil {
				return err
			}
			if err := a.writeForToolItem(event.Item.Item.Tool); err != nil {
				return err
			}
			return a.finishStep()
		}
	case event.Response != nil:
		// Final agent response does not emit an extra stream part.
		return nil
	}

	return nil
}

func (a *dataStreamProtocolAdapter) EmitError(errorText string) error {
	if a.closed {
		return nil
	}
	return a.writer.Write(errorChunk{ErrorText: errorText})
}

func (a *dataStreamProtocolAdapter) Close() error {
	if a.closed {
		return nil
	}
	if err := a.finishStep(); err != nil {
		return err
	}
	if err := a.writer.Write(finishChunk{}); err != nil {
		return err
	}
	if err := a.writer.Close(); err != nil {
		return err
	}
	a.closed = true
	return nil
}

func (a *dataStreamProtocolAdapter) ensureStepStarted() error {
	if a.stepStarted {
		return nil
	}
	if err := a.writer.Write(startStepChunk{}); err != nil {
		return err
	}
	a.stepStarted = true
	return nil
}

func (a *dataStreamProtocolAdapter) finishStep() error {
	if !a.stepStarted {
		return nil
	}
	if err := a.flushStates(); err != nil {
		return err
	}
	if err := a.writer.Write(finishStepChunk{}); err != nil {
		return err
	}
	a.stepStarted = false
	return nil
}

func (a *dataStreamProtocolAdapter) flushStates() error {
	for index, state := range a.textStateMap {
		if err := a.writer.Write(textEndChunk{ID: state.id}); err != nil {
			return err
		}
		delete(a.textStateMap, index)
	}

	for index, state := range a.reasoningStateMap {
		if err := a.writer.Write(reasoningEndChunk{ID: state.id}); err != nil {
			return err
		}
		delete(a.reasoningStateMap, index)
	}

	for index, state := range a.toolCallStateMap {
		if state.toolCallID != "" && state.toolName != "" && state.argsBuilder.Len() > 0 {
			input := safeJSONParse(state.argsBuilder.String())
			if err := a.writer.Write(toolInputAvailableChunk{
				ToolCallID: state.toolCallID,
				ToolName:   state.toolName,
				Input:      input,
			}); err != nil {
				return err
			}
		}
		delete(a.toolCallStateMap, index)
	}

	return nil
}

func (a *dataStreamProtocolAdapter) writeDelta(delta *llmsdk.ContentDelta) error {
	switch {
	case delta.Part.TextPartDelta != nil:
		return a.writeForTextPartDelta(delta.Index, delta.Part.TextPartDelta)
	case delta.Part.ReasoningPartDelta != nil:
		return a.writeForReasoningPartDelta(delta.Index, delta.Part.ReasoningPartDelta)
	case delta.Part.ToolCallPartDelta != nil:
		return a.writeForToolCallPartDelta(delta.Index, delta.Part.ToolCallPartDelta)
	case delta.Part.AudioPartDelta != nil:
		return a.flushStates()
	case delta.Part.ImagePartDelta != nil:
		return a.flushStates()
	default:
		return nil
	}
}

func (a *dataStreamProtocolAdapter) writeForTextPartDelta(index int, part *llmsdk.TextPartDelta) error {
	state, ok := a.textStateMap[index]
	if !ok {
		if err := a.flushStates(); err != nil {
			return err
		}
		state = textStreamState{id: "text_" + uuid.NewString()}
		a.textStateMap[index] = state
		if err := a.writer.Write(textStartChunk{ID: state.id}); err != nil {
			return err
		}
	}

	return a.writer.Write(textDeltaChunk{ID: state.id, Delta: part.Text})
}

func (a *dataStreamProtocolAdapter) writeForReasoningPartDelta(index int, part *llmsdk.ReasoningPartDelta) error {
	state, ok := a.reasoningStateMap[index]
	if !ok {
		if err := a.flushStates(); err != nil {
			return err
		}
		id := "reasoning_" + uuid.NewString()
		if part.ID != nil && *part.ID != "" {
			id = "reasoning_" + *part.ID
		}
		state = reasoningStreamState{id: id}
		a.reasoningStateMap[index] = state
		if err := a.writer.Write(reasoningStartChunk{ID: state.id}); err != nil {
			return err
		}
	}

	return a.writer.Write(reasoningDeltaChunk{ID: state.id, Delta: part.Text})
}

func (a *dataStreamProtocolAdapter) writeForToolCallPartDelta(index int, part *llmsdk.ToolCallPartDelta) error {
	state, ok := a.toolCallStateMap[index]
	if !ok {
		if err := a.flushStates(); err != nil {
			return err
		}
		state = &toolCallStreamState{}
		a.toolCallStateMap[index] = state
	}

	if part.ToolCallID != nil && *part.ToolCallID != "" {
		state.toolCallID = *part.ToolCallID
	}
	if part.ToolName != nil && *part.ToolName != "" {
		state.toolName = *part.ToolName
	}

	if !state.didEmitStart && state.toolCallID != "" && state.toolName != "" {
		state.didEmitStart = true
		if err := a.writer.Write(toolInputStartChunk{
			ToolCallID: state.toolCallID,
			ToolName:   state.toolName,
		}); err != nil {
			return err
		}
	}

	if part.Args != nil && *part.Args != "" {
		state.argsBuilder.WriteString(*part.Args)
		return a.writer.Write(toolInputDeltaChunk{
			ToolCallID:     state.toolCallID,
			InputTextDelta: *part.Args,
		})
	}

	return nil
}

func (a *dataStreamProtocolAdapter) writeForToolItem(item *llmagent.AgentItemTool) error {
	if err := a.flushStates(); err != nil {
		return err
	}

	var textBuffer strings.Builder
	for _, part := range item.Output {
		if part.TextPart != nil {
			textBuffer.WriteString(part.TextPart.Text)
		}
	}

	var output any
	if textBuffer.Len() > 0 {
		output = safeJSONParse(textBuffer.String())
	} else {
		output = item.Output
	}

	return a.writer.Write(toolOutputAvailableChunk{
		ToolCallID: item.ToolCallID,
		Output:     output,
	})
}

// ==== Adapter layers ====

// uiPartToParts converts UI message parts produced by the Vercel AI SDK back
// into llm-sdk Part values so the agent can reconstruct history, tool calls,
// and intermediate reasoning steps.
func uiPartToParts(part uiPart) []llmsdk.Part {
	switch {
	case part.Text != nil:
		return []llmsdk.Part{llmsdk.NewTextPart(part.Text.Text)}
	case part.Reasoning != nil:
		return []llmsdk.Part{llmsdk.NewReasoningPart(part.Reasoning.Text)}
	case part.DynamicTool != nil:
		if part.DynamicTool.ToolCallID == "" || part.DynamicTool.ToolName == "" {
			return nil
		}
		return []llmsdk.Part{llmsdk.NewToolCallPart(part.DynamicTool.ToolCallID, part.DynamicTool.ToolName, part.DynamicTool.Input)}
	case part.File != nil:
		return convertFilePart(part.File)
	case part.Tool != nil:
		return convertToolPart(part.Tool)
	default:
		return nil
	}
}

func convertFilePart(part *fileUIPart) []llmsdk.Part {
	data := extractDataPayload(part.URL)
	switch {
	case strings.HasPrefix(part.MediaType, "image/"):
		imagePart := llmsdk.NewImagePart(data, part.MediaType)
		return []llmsdk.Part{imagePart}
	case strings.HasPrefix(part.MediaType, "audio/"):
		format, err := partutil.MapMimeTypeToAudioFormat(part.MediaType)
		if err != nil {
			return nil
		}
		return []llmsdk.Part{llmsdk.NewAudioPart(data, format)}
	case strings.HasPrefix(part.MediaType, "text/"):
		decoded, err := base64.StdEncoding.DecodeString(data)
		if err != nil {
			return nil
		}
		return []llmsdk.Part{llmsdk.NewTextPart(string(decoded))}
	default:
		return nil
	}
}

func convertToolPart(part *toolUIPart) []llmsdk.Part {
	name := part.resolvedToolName()
	if part.ToolCallID == "" || name == "" {
		return nil
	}
	switch part.State {
	case "input-available":
		return []llmsdk.Part{llmsdk.NewToolCallPart(part.ToolCallID, name, part.Input)}
	case "output-available":
		call := llmsdk.NewToolCallPart(part.ToolCallID, name, part.Input)
		result := llmsdk.NewToolResultPart(part.ToolCallID, name, []llmsdk.Part{
			llmsdk.NewTextPart(safeJSONMarshal(part.Output)),
		})
		return []llmsdk.Part{call, result}
	case "output-error":
		call := llmsdk.NewToolCallPart(part.ToolCallID, name, part.Input)
		result := llmsdk.NewToolResultPart(part.ToolCallID, name, []llmsdk.Part{
			llmsdk.NewTextPart(part.ErrorText),
		})
		return []llmsdk.Part{call, result}
	default:
		return nil
	}
}

func uiMessagesToMessages(messages []uiMessage) ([]llmsdk.Message, error) {
	history := make([]llmsdk.Message, 0, len(messages))

	for _, message := range messages {
		switch message.Role {
		case uiRoleUser:
			var parts []llmsdk.Part
			for _, part := range message.Parts {
				parts = append(parts, uiPartToParts(part)...)
			}
			if len(parts) == 0 {
				continue
			}
			history = append(history, llmsdk.NewUserMessage(parts...))
		case uiRoleAssistant:
			for _, part := range message.Parts {
				for _, converted := range uiPartToParts(part) {
					switch converted.Type() {
					case llmsdk.PartTypeText, llmsdk.PartTypeReasoning, llmsdk.PartTypeAudio, llmsdk.PartTypeImage, llmsdk.PartTypeToolCall:
						appendAssistantMessage(&history, converted)
					case llmsdk.PartTypeToolResult:
						appendToolMessage(&history, converted)
					}
				}
			}
		default:
			// ignore unsupported roles
		}
	}

	return history, nil
}

func appendAssistantMessage(history *[]llmsdk.Message, part llmsdk.Part) {
	n := len(*history)
	if n > 0 {
		last := &(*history)[n-1]
		if msg := last.AssistantMessage; msg != nil {
			msg.Content = append(msg.Content, part)
			return
		}
		if last.ToolMessage != nil && n >= 2 {
			prev := &(*history)[n-2]
			if msg := prev.AssistantMessage; msg != nil {
				msg.Content = append(msg.Content, part)
				return
			}
		}
	}

	*history = append(*history, llmsdk.NewAssistantMessage(part))
}

func appendToolMessage(history *[]llmsdk.Message, part llmsdk.Part) {
	n := len(*history)
	if n > 0 {
		last := &(*history)[n-1]
		if msg := last.ToolMessage; msg != nil {
			msg.Content = append(msg.Content, part)
			return
		}
	}

	*history = append(*history, llmsdk.NewToolMessage(part))
}

// ==== HTTP handlers ====

func handleChatRequest(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Access-Control-Allow-Origin", "*")
	w.Header().Set("Access-Control-Allow-Headers", "content-type")

	if r.Method == http.MethodOptions {
		w.WriteHeader(http.StatusNoContent)
		return
	}

	if r.Method != http.MethodPost {
		http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
		return
	}

	bodyBytes, err := readRequestBody(r)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}

	var body chatRequestBody
	if err := json.Unmarshal(bodyBytes, &body); err != nil {
		http.Error(w, fmt.Sprintf("invalid request body: %v", err), http.StatusBadRequest)
		return
	}

	provider := body.Provider
	if provider == "" {
		provider = "openai"
	}
	modelID := body.ModelID
	if modelID == "" {
		modelID = "gpt-4o-mini"
	}

	var metadata llmsdk.LanguageModelMetadata
	if body.Metadata != nil {
		metadata = *body.Metadata
	}

	agent := createAgent(provider, modelID, metadata)

	messages, err := uiMessagesToMessages(body.Messages)
	if err != nil {
		http.Error(w, fmt.Sprintf("invalid messages payload: %v", err), http.StatusBadRequest)
		return
	}
	items := make([]llmagent.AgentItem, 0, len(messages))
	for _, message := range messages {
		items = append(items, llmagent.NewAgentItemMessage(message))
	}

	w.Header().Set("Content-Type", "text/event-stream")
	w.Header().Set("Cache-Control", "no-cache, no-transform")
	w.Header().Set("Connection", "keep-alive")
	w.Header().Set("x-vercel-ai-ui-message-stream", "v1")
	w.WriteHeader(http.StatusOK)

	adapter, err := newDataStreamProtocolAdapter(w)
	if err != nil {
		// The 200 status is already written, so http.Error can no longer
		// change the response; log the failure and stop instead.
		log.Printf("ai-sdk-ui: failed to start stream: %v", err)
		return
	}

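	ctx, cancel := context.WithCancel(r.Context())
	defer cancel()

	stream, err := agent.RunStream(ctx, llmagent.AgentRequest[chatContext]{
		Input:   items,
		Context: chatContext{},
	})
	if err != nil {
		// Headers and the start chunk are already sent, so report the failure
		// in-band as an error chunk rather than through http.Error.
		if emitErr := adapter.EmitError(err.Error()); emitErr != nil {
			log.Printf("ai-sdk-ui: failed to emit error chunk: %v", emitErr)
		}
		if closeErr := adapter.Close(); closeErr != nil {
			log.Printf("ai-sdk-ui: failed to close stream: %v", closeErr)
		}
		return
	}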

	clientClosed := false

	for stream.Next() {
		event := stream.Current()
		if err := adapter.Write(event); err != nil {
			clientClosed = true
			cancel()
			break
		}
		if ctx.Err() != nil {
			clientClosed = true
			break
		}
	}

	if streamErr := stream.Err(); streamErr != nil && !errors.Is(streamErr, context.Canceled) {
		if err := adapter.EmitError(streamErr.Error()); err != nil {
			log.Printf("ai-sdk-ui: failed to emit error chunk: %v", err)
		}
	}

	if !clientClosed {
		if err := adapter.Close(); err != nil {
			log.Printf("ai-sdk-ui: failed to close stream: %v", err)
		}
	}
}

func readRequestBody(r *http.Request) ([]byte, error) {
	defer r.Body.Close()
	return io.ReadAll(r.Body)
}

// ==== Utility helpers ====

// safeJSONParse attempts to decode tool arguments or results as JSON. When a
// payload is not valid JSON we fall back to the original string so the UI can
// still surface something meaningful to the user.
func safeJSONParse(raw string) any {
	if strings.TrimSpace(raw) == "" {
		return raw
	}

	decoder := json.NewDecoder(strings.NewReader(raw))
	decoder.UseNumber()

	var value any
	if err := decoder.Decode(&value); err != nil {
		return raw
	}
	if decoder.More() {
		return raw
	}
	return value
}

func safeJSONMarshal(value any) string {
	if value == nil {
		return "null"
	}
	data, err := json.Marshal(value)
	if err != nil {
		return fmt.Sprintf("%v", value)
	}
	return string(data)
}

func extractDataPayload(dataURL string) string {
	if idx := strings.Index(dataURL, ","); idx != -1 {
		return dataURL[idx+1:]
	}
	return dataURL
}

// ==== Server bootstrap ====

func main() {
	mux := http.NewServeMux()
	mux.HandleFunc("/api/chat", handleChatRequest)
	mux.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		w.WriteHeader(http.StatusNotFound)
		_, _ = w.Write([]byte(`{"error":"Not found"}`))
	})

	port := "8000"
	log.Printf("AI SDK UI example server listening on http://localhost:%s", port)
	if err := http.ListenAndServe(":"+port, mux); err != nil {
		log.Fatal(err)
	}
}

use async_stream::stream;
use axum::{
    body::Body,
    extract::Json,
    http::{HeaderValue, Response, StatusCode},
    response::{sse::Event, IntoResponse, Sse},
    routing::{get, post},
    Router,
};
use base64::{engine::general_purpose::STANDARD as BASE64_STANDARD, Engine};
use dotenvy::dotenv;
use futures::{future::BoxFuture, StreamExt};
use llm_agent::{Agent, AgentItem, AgentRequest, AgentTool, AgentToolResult, BoxedError, RunState};
use llm_sdk::{
    AudioFormat, LanguageModelMetadata, Message, Part, PartDelta, PartialModelResponse,
    ToolResultPart,
};
use serde::{de, Deserialize, Serialize};
use serde_json::{json, Value};
use std::{
    collections::HashMap,
    convert::Infallible,
    error::Error,
    mem,
    time::{Duration, SystemTime, UNIX_EPOCH},
};
use tokio::net::TcpListener;

mod common;

// ==== Vercel AI SDK types ====

#[derive(Debug, Clone, Copy, Deserialize, PartialEq, Eq)]
#[serde(rename_all = "lowercase")]
enum UIMessageRole {
    System,
    User,
    Assistant,
    #[serde(other)]
    Unknown,
}

#[derive(Debug, Deserialize)]
#[serde(rename_all = "camelCase")]
struct UIMessage {
    id: Option<String>,
    role: UIMessageRole,
    #[serde(default)]
    parts: Vec<UIMessagePart>,
    #[serde(default)]
    metadata: Option<Value>,
}

#[derive(Debug, Deserialize)]
#[serde(rename_all = "camelCase")]
struct ChatRequestBody {
    id: Option<String>,
    trigger: Option<String>,
    message_id: Option<String>,
    #[serde(default)]
    messages: Vec<UIMessage>,
    provider: Option<String>,
    model_id: Option<String>,
    metadata: Option<LanguageModelMetadata>,
}

#[derive(Debug)]
enum UIMessagePart {
    Text(TextUIPart),
    Reasoning(ReasoningUIPart),
    DynamicTool(DynamicToolUIPart),
    Tool(ToolUIPart),
    File(FileUIPart),
    Unknown,
}

#[derive(Debug, Deserialize)]
struct TextUIPart {
    text: String,
}

#[derive(Debug, Deserialize)]
struct ReasoningUIPart {
    text: String,
}

#[derive(Debug, Deserialize)]
#[serde(rename_all = "camelCase")]
struct DynamicToolUIPart {
    tool_name: String,
    tool_call_id: String,
    input: Option<Value>,
}

#[derive(Debug, Deserialize)]
#[serde(rename_all = "camelCase")]
struct ToolUIPart {
    #[serde(skip)]
    type_tag: String,
    state: String,
    tool_call_id: String,
    tool_name: Option<String>,
    input: Option<Value>,
    output: Option<Value>,
    error_text: Option<String>,
}

#[derive(Debug, Deserialize)]
#[serde(rename_all = "camelCase")]
struct FileUIPart {
    url: String,
    media_type: String,
    #[serde(default)]
    filename: Option<String>,
}

impl<'de> Deserialize<'de> for UIMessagePart { fn deserialize<D>(deserializer: D) -> Result<Self, D::Error> where D: de::Deserializer<'de>, { let value = Value::deserialize(deserializer)?; let type_str = value .get("type") .and_then(Value::as_str) .ok_or_else(|| de::Error::missing_field("type"))?; match type_str { "text" => { let part: TextUIPart = serde_json::from_value(value).map_err(de::Error::custom)?; Ok(Self::Text(part)) } "reasoning" => { let part: ReasoningUIPart = serde_json::from_value(value).map_err(de::Error::custom)?; Ok(Self::Reasoning(part)) } "dynamic-tool" => { let part: DynamicToolUIPart = serde_json::from_value(value).map_err(de::Error::custom)?; Ok(Self::DynamicTool(part)) } "file" => { let part: FileUIPart = serde_json::from_value(value).map_err(de::Error::custom)?; Ok(Self::File(part)) } _ if type_str.starts_with("tool-") => { let mut part: ToolUIPart = serde_json::from_value(value.clone()).map_err(de::Error::custom)?; part.type_tag = type_str.to_string(); if part.tool_name.is_none() { let candidate = type_str.trim_start_matches("tool-"); if !candidate.is_empty() { part.tool_name = Some(candidate.to_string()); } } Ok(Self::Tool(part)) } _ => Ok(Self::Unknown), } }}

impl ToolUIPart { fn resolved_tool_name(&self) -> Option<&str> { if let Some(name) = &self.tool_name { if !name.is_empty() { return Some(name); } } let derived = self.type_tag.trim_start_matches("tool-"); if derived.is_empty() { None } else { Some(derived) } }}

// ==== Agent setup ====

#[derive(Clone, Default)]struct ChatContext;

struct TimeTool;

impl AgentTool<ChatContext> for TimeTool { fn name(&self) -> String { "get_current_time".to_string() }

fn description(&self) -> String { "Get the current server time in ISO 8601 format.".to_string() }

fn parameters(&self) -> Value { json!({ "type": "object", "properties": {}, "additionalProperties": false }) }

fn execute<'a>( &'a self, _args: Value, _context: &'a ChatContext, _state: &'a RunState, ) -> BoxFuture<'a, Result<AgentToolResult, Box<dyn Error + Send + Sync>>> { Box::pin(async move { let now = chrono::Utc::now().to_rfc3339(); Ok(AgentToolResult { content: vec![Part::text(now)], is_error: false, }) }) }}

#[derive(Debug, Deserialize)]struct WeatherParams { location: String,}

struct WeatherTool;

impl AgentTool<ChatContext> for WeatherTool { fn name(&self) -> String { "get_local_weather".to_string() }

fn description(&self) -> String { "Return a lightweight weather forecast for a given city using mock data.".to_string() }

fn parameters(&self) -> Value { json!({ "type": "object", "properties": { "location": { "type": "string", "description": "City name to look up weather for." } }, "required": ["location"], "additionalProperties": false }) }

fn execute<'a>( &'a self, args: Value, _context: &'a ChatContext, _state: &'a RunState, ) -> BoxFuture<'a, Result<AgentToolResult, Box<dyn Error + Send + Sync>>> { Box::pin(async move { let params: WeatherParams = serde_json::from_value(args)?; let trimmed = params.location.trim(); let conditions = ["sunny", "cloudy", "rainy", "breezy"]; let condition = conditions[trimmed.len() % conditions.len()]; let payload = json!({ "location": trimmed, "condition": condition, "temperatureC": 18 + (trimmed.len() % 10), }); Ok(AgentToolResult { content: vec![Part::text(payload.to_string())], is_error: false, }) }) }}

fn create_agent( provider: &str, model_id: &str, metadata: LanguageModelMetadata,) -> Result<Agent<ChatContext>, BoxedError> { let model = common::get_model(provider, model_id, metadata, None)?; Ok(Agent::<ChatContext>::builder("UIExampleAgent", model) .add_instruction("You are an assistant orchestrated by the llm-agent SDK.") .add_instruction("Use the available tools when they can provide better answers.") .add_tool(TimeTool) .add_tool(WeatherTool) .build())}

// ==== Streaming adapter ====

#[derive(Debug, Serialize)]
#[serde(tag = "type", rename_all = "kebab-case")]
enum UIMessageChunk {
    #[serde(rename_all = "camelCase")]
    Start {
        #[serde(skip_serializing_if = "Option::is_none")]
        message_id: Option<String>,
        #[serde(skip_serializing_if = "Option::is_none")]
        message_metadata: Option<Value>,
    },
    #[serde(rename_all = "camelCase")]
    Finish {
        #[serde(skip_serializing_if = "Option::is_none")]
        message_metadata: Option<Value>,
    },
    StartStep,
    FinishStep,
    #[serde(rename_all = "camelCase")]
    TextStart {
        id: String,
    },
    #[serde(rename_all = "camelCase")]
    TextDelta {
        id: String,
        delta: String,
    },
    #[serde(rename_all = "camelCase")]
    TextEnd {
        id: String,
    },
    #[serde(rename_all = "camelCase")]
    ReasoningStart {
        id: String,
    },
    #[serde(rename_all = "camelCase")]
    ReasoningDelta {
        id: String,
        delta: String,
    },
    #[serde(rename_all = "camelCase")]
    ReasoningEnd {
        id: String,
    },
    #[serde(rename_all = "camelCase")]
    ToolInputStart {
        tool_call_id: String,
        tool_name: String,
    },
    #[serde(rename_all = "camelCase")]
    ToolInputDelta {
        tool_call_id: String,
        input_text_delta: String,
    },
    #[serde(rename_all = "camelCase")]
    ToolInputAvailable {
        tool_call_id: String,
        tool_name: String,
        input: Value,
    },
    #[serde(rename_all = "camelCase")]
    ToolOutputAvailable {
        tool_call_id: String,
        output: Value,
    },
    #[serde(rename_all = "camelCase")]
    Error {
        error_text: String,
    },
}

impl UIMessageChunk { fn new_start(message_id: Option<String>, message_metadata: Option<Value>) -> Self { Self::Start { message_id, message_metadata, } }

fn new_finish(message_metadata: Option<Value>) -> Self { Self::Finish { message_metadata } }

fn to_json_string(&self) -> String { serde_json::to_string(self).unwrap_or_else(|_| "{}".to_string()) }}

struct ToolCallStreamState { tool_call_id: Option<String>, tool_name: Option<String>, args_buffer: String, did_emit_start: bool,}

impl ToolCallStreamState { fn new() -> Self { Self { tool_call_id: None, tool_name: None, args_buffer: String::new(), did_emit_start: false, } }}

/// Bridges `AgentStreamEvent`s back into the Vercel AI SDK data stream
/// protocol. Feed every event from `Agent::run_stream` into `handle_event`
/// so the UI receives the expected chunks.
struct DataStreamProtocolAdapter {
    message_id: String,
    text_state: HashMap<usize, String>,
    reasoning_state: HashMap<usize, String>,
    tool_call_state: HashMap<usize, ToolCallStreamState>,
    text_counter: usize,
    reasoning_counter: usize,
    step_started: bool,
    closed: bool,
}

impl DataStreamProtocolAdapter { fn new() -> (Self, UIMessageChunk) { let nanos = SystemTime::now() .duration_since(UNIX_EPOCH) .unwrap_or_default() .as_nanos(); let adapter = Self { message_id: format!("msg_{nanos}"), text_state: HashMap::new(), reasoning_state: HashMap::new(), tool_call_state: HashMap::new(), text_counter: 0, reasoning_counter: 0, step_started: false, closed: false, }; let start = UIMessageChunk::new_start(Some(adapter.message_id.clone()), None); (adapter, start) }

fn allocate_text_id(&mut self) -> String { self.text_counter += 1; format!("text_{}", self.text_counter) }

fn allocate_reasoning_id(&mut self, provided: Option<&str>) -> String { if let Some(id) = provided { if !id.is_empty() { return format!("reasoning_{id}"); } } self.reasoning_counter += 1; format!("reasoning_{}", self.reasoning_counter) }

fn ensure_step_started(&mut self) -> Option<UIMessageChunk> { if self.step_started { return None; } self.step_started = true; Some(UIMessageChunk::StartStep) }

fn finish_step(&mut self) -> Vec<UIMessageChunk> { if !self.step_started { return Vec::new(); } let mut events = self.flush_states(); events.push(UIMessageChunk::FinishStep); self.step_started = false; events }

fn flush_states(&mut self) -> Vec<UIMessageChunk> { let mut events = Vec::new();

for state_id in mem::take(&mut self.text_state).into_values() { events.push(UIMessageChunk::TextEnd { id: state_id }); }

for state_id in mem::take(&mut self.reasoning_state).into_values() { events.push(UIMessageChunk::ReasoningEnd { id: state_id }); }

for state in mem::take(&mut self.tool_call_state).into_values() { if let (Some(tool_call_id), Some(tool_name)) = (state.tool_call_id, state.tool_name) { if !state.args_buffer.is_empty() { let input = safe_json_parse(&state.args_buffer); events.push(UIMessageChunk::ToolInputAvailable { tool_call_id, tool_name, input, }); } } }

events }

fn handle_event(&mut self, event: &llm_agent::AgentStreamEvent) -> Vec<UIMessageChunk> { match event { llm_agent::AgentStreamEvent::Partial(PartialModelResponse { delta: Some(delta), .. }) => { let mut events = Vec::new(); if let Some(start) = self.ensure_step_started() { events.push(start); } events.extend(self.write_delta(delta)); events } llm_agent::AgentStreamEvent::Partial(_) => Vec::new(), llm_agent::AgentStreamEvent::Item(item_event) => { let mut events = self.finish_step(); if let AgentItem::Tool(tool) = &item_event.item { if let Some(start) = self.ensure_step_started() { events.push(start); } events.extend(self.write_for_tool_item(tool)); events.extend(self.finish_step()); } events } llm_agent::AgentStreamEvent::Response(_) => Vec::new(), } }

fn write_delta(&mut self, delta: &llm_sdk::ContentDelta) -> Vec<UIMessageChunk> { match &delta.part { PartDelta::Text(text_delta) => { self.write_for_text_part(delta.index, text_delta.text.clone()) } PartDelta::Reasoning(reasoning_delta) => self.write_for_reasoning_part( delta.index, reasoning_delta.text.clone().unwrap_or_default(), reasoning_delta.id.clone(), ), PartDelta::ToolCall(tool_delta) => { self.write_for_tool_call_part(delta.index, tool_delta) } PartDelta::Audio(_) | PartDelta::Image(_) => self.flush_states(), } }

fn write_for_text_part(&mut self, index: usize, text: String) -> Vec<UIMessageChunk> { let mut events = Vec::new(); let id = if let Some(existing) = self.text_state.get(&index) { existing.clone() } else { let identifier = self.allocate_text_id(); self.text_state.insert(index, identifier.clone()); events.push(UIMessageChunk::TextStart { id: identifier.clone(), }); identifier };

events.push(UIMessageChunk::TextDelta { id, delta: text }); events }

fn write_for_reasoning_part( &mut self, index: usize, text: String, id: Option<String>, ) -> Vec<UIMessageChunk> { let mut events = Vec::new(); let identifier = if let Some(existing) = self.reasoning_state.get(&index) { existing.clone() } else { let identifier = self.allocate_reasoning_id(id.as_deref()); self.reasoning_state.insert(index, identifier.clone()); events.push(UIMessageChunk::ReasoningStart { id: identifier.clone(), }); identifier };

events.push(UIMessageChunk::ReasoningDelta { id: identifier, delta: text, }); events }

    fn write_for_tool_call_part(
        &mut self,
        index: usize,
        part: &llm_sdk::ToolCallPartDelta,
    ) -> Vec<UIMessageChunk> {
        let mut events = Vec::new();
        // First delta for this index: close any open text/reasoning parts.
        if !self.tool_call_state.contains_key(&index) {
            events.extend(self.flush_states());
            self.tool_call_state
                .insert(index, ToolCallStreamState::new());
        }
        let state = self.tool_call_state.get_mut(&index).expect("tool state");

        if let Some(tool_call_id) = &part.tool_call_id {
            state.tool_call_id = Some(tool_call_id.clone());
        }
        if let Some(tool_name) = &part.tool_name {
            state.tool_name = Some(tool_name.clone());
        }

        // Emit the start chunk once, as soon as both the ID and the name are known.
        if !state.did_emit_start {
            if let (Some(tool_call_id), Some(tool_name)) = (&state.tool_call_id, &state.tool_name) {
                state.did_emit_start = true;
                events.push(UIMessageChunk::ToolInputStart {
                    tool_call_id: tool_call_id.clone(),
                    tool_name: tool_name.clone(),
                });
            }
        }

        // Always buffer the arguments; forward them only when the call ID is known.
        if let Some(args_chunk) = &part.args {
            state.args_buffer.push_str(args_chunk);
            if let Some(tool_call_id) = &state.tool_call_id {
                events.push(UIMessageChunk::ToolInputDelta {
                    tool_call_id: tool_call_id.clone(),
                    input_text_delta: args_chunk.clone(),
                });
            }
        }

        events
    }

    fn write_for_tool_item(&mut self, item: &llm_agent::AgentItemTool) -> Vec<UIMessageChunk> {
        let mut events = self.flush_states();

        // Concatenate every text part of the tool output.
        let mut text_buffer = String::new();
        for part in &item.output {
            if let Part::Text(text_part) = part {
                text_buffer.push_str(&text_part.text);
            }
        }

        // With no text output, fall back to serializing the raw parts.
        let output = if text_buffer.is_empty() {
            serde_json::to_value(&item.output).unwrap_or(Value::Null)
        } else {
            safe_json_parse(&text_buffer)
        };

        events.push(UIMessageChunk::ToolOutputAvailable {
            tool_call_id: item.tool_call_id.clone(),
            output,
        });

        events
    }

    fn emit_error(&self, error_text: &str) -> UIMessageChunk {
        UIMessageChunk::Error {
            error_text: error_text.to_string(),
        }
    }

    fn finish(&mut self) -> Vec<UIMessageChunk> {
        if self.closed {
            return Vec::new();
        }
        let mut events = self.finish_step();
        events.push(UIMessageChunk::new_finish(None));
        self.closed = true;
        events
    }
}

// ==== Adapter helpers ====

fn convert_file_part(part: &FileUIPart) -> Result<Vec<Part>, String> {
    let data = extract_data_payload(&part.url);
    if part.media_type.starts_with("image/") {
        Ok(vec![Part::image(data, &part.media_type)])
    } else if part.media_type.starts_with("audio/") {
        // Audio in an unrecognized format is dropped rather than rejected.
        if let Some(format) = map_mime_type_to_audio_format(&part.media_type) {
            Ok(vec![Part::audio(data, format)])
        } else {
            Ok(Vec::new())
        }
    } else if part.media_type.starts_with("text/") {
        // Text files are expected to arrive as base64-encoded payloads.
        let decoded = BASE64_STANDARD
            .decode(data.as_bytes())
            .map_err(|err| format!("Failed to decode text data: {err}"))?;
        let text = String::from_utf8(decoded)
            .map_err(|err| format!("Invalid UTF-8 text payload: {err}"))?;
        Ok(vec![Part::text(text)])
    } else {
        Ok(Vec::new())
    }
}

fn convert_tool_part(part: &ToolUIPart) -> Result<Vec<Part>, String> {
    let tool_name = part
        .resolved_tool_name()
        .ok_or_else(|| "Missing tool name".to_string())?
        .to_string();
    match part.state.as_str() {
        "input-available" => Ok(vec![Part::tool_call(
            &part.tool_call_id,
            &tool_name,
            part.input.clone().unwrap_or(Value::Null),
        )]),
        "output-available" => {
            let call = Part::tool_call(
                &part.tool_call_id,
                &tool_name,
                part.input.clone().unwrap_or(Value::Null),
            );
            let output_text =
                serde_json::to_string(&part.output).unwrap_or_else(|_| "null".to_string());
            let result: Part = ToolResultPart::new(
                &part.tool_call_id,
                &tool_name,
                vec![Part::text(output_text)],
            )
            .into();
            Ok(vec![call, result])
        }
        "output-error" => {
            let call = Part::tool_call(
                &part.tool_call_id,
                &tool_name,
                part.input.clone().unwrap_or(Value::Null),
            );
            let result: Part = ToolResultPart::new(
                &part.tool_call_id,
                &tool_name,
                vec![Part::text(part.error_text.clone().unwrap_or_default())],
            )
            .with_is_error(true)
            .into();
            Ok(vec![call, result])
        }
        _ => Ok(Vec::new()),
    }
}

fn ui_message_part_to_parts(part: &UIMessagePart) -> Result<Vec<Part>, String> {
    match part {
        UIMessagePart::Text(part) => Ok(vec![Part::text(&part.text)]),
        UIMessagePart::Reasoning(part) => Ok(vec![Part::reasoning(part.text.clone())]),
        UIMessagePart::DynamicTool(part) => Ok(vec![Part::tool_call(
            &part.tool_call_id,
            &part.tool_name,
            part.input.clone().unwrap_or(Value::Null),
        )]),
        UIMessagePart::File(part) => convert_file_part(part),
        UIMessagePart::Tool(part) => convert_tool_part(part),
        UIMessagePart::Unknown => Ok(Vec::new()),
    }
}

fn ui_messages_to_messages(messages: &[UIMessage]) -> Result<Vec<Message>, String> {
    let mut history = Vec::new();

    for message in messages {
        match message.role {
            UIMessageRole::User => {
                let mut parts = Vec::new();
                for part in &message.parts {
                    parts.extend(ui_message_part_to_parts(part)?);
                }
                if !parts.is_empty() {
                    history.push(Message::user(parts));
                }
            }
            UIMessageRole::Assistant => {
                for part in &message.parts {
                    for converted in ui_message_part_to_parts(part)? {
                        match converted {
                            Part::Text(_)
                            | Part::Reasoning(_)
                            | Part::Audio(_)
                            | Part::Image(_)
                            | Part::ToolCall(_) => {
                                append_assistant_message(&mut history, converted)
                            }
                            Part::ToolResult(_) => append_tool_message(&mut history, converted),
                            Part::Source(_) => {}
                        }
                    }
                }
            }
            UIMessageRole::System | UIMessageRole::Unknown => {}
        }
    }

    Ok(history)
}

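/// Appends `part` to the trailing assistant message when there is one. If the
/// history instead ends with a tool message that directly follows an assistant
/// message, the part is merged into that assistant message. Otherwise a new
/// assistant message is started.
fn append_assistant_message(history: &mut Vec<Message>, part: Part) {
    if let Some(Message::Assistant(assistant)) = history.last_mut() {
        assistant.content.push(part);
        return;
    }

    if history.len() >= 2 {
        let last_index = history.len() - 1;
        let last_is_tool = matches!(history[last_index], Message::Tool(_));
        if last_is_tool {
            if let Some(Message::Assistant(assistant)) = history.get_mut(last_index - 1) {
                assistant.content.push(part);
                return;
            }
        }
    }

    history.push(Message::assistant(vec![part]));
}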

fn append_tool_message(history: &mut Vec<Message>, part: Part) {
    if let Some(Message::Tool(tool)) = history.last_mut() {
        tool.content.push(part);
        return;
    }

    history.push(Message::tool(vec![part]));
}

/// Attempts to parse tool arguments or results as JSON. When decoding fails we
/// fall back to the original string so the UI can still render the payload.
fn safe_json_parse(raw: &str) -> Value {
    serde_json::from_str(raw).unwrap_or_else(|_| Value::String(raw.to_string()))
}

fn map_mime_type_to_audio_format(mime_type: &str) -> Option<AudioFormat> {
    // Parameters such as `;codecs="opus"` are stripped before matching, so the
    // arms below only need to list bare MIME types.
    let normalized = mime_type.split(';').next().unwrap_or(mime_type).trim();
    match normalized {
        "audio/wav" => Some(AudioFormat::Wav),
        "audio/L16" | "audio/l16" => Some(AudioFormat::Linear16),
        "audio/flac" => Some(AudioFormat::Flac),
        "audio/basic" => Some(AudioFormat::Mulaw),
        "audio/mpeg" => Some(AudioFormat::Mp3),
        "audio/ogg" => Some(AudioFormat::Opus),
        "audio/aac" => Some(AudioFormat::Aac),
        _ => None,
    }
}

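/// Returns everything after the first comma of a `data:` URL, or the input
/// unchanged when it contains no comma.
fn extract_data_payload(url: &str) -> String {
    url.split_once(',')
        .map(|(_, data)| data.to_string())
        .unwrap_or_else(|| url.to_string())
}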

// ==== HTTP handlers ====

async fn chat_handler(
    Json(body): Json<ChatRequestBody>,
) -> Result<Response<Body>, (StatusCode, String)> {
    let provider = body.provider.unwrap_or_else(|| "openai".to_string());
    let model_id = body.model_id.unwrap_or_else(|| "gpt-4o-mini".to_string());
    let metadata = body.metadata.unwrap_or_default();

    let agent = create_agent(&provider, &model_id, metadata)
        .map_err(|err| (StatusCode::INTERNAL_SERVER_ERROR, err.to_string()))?;

    // Rehydrate the UI message history into agent items.
    let history =
        ui_messages_to_messages(&body.messages).map_err(|err| (StatusCode::BAD_REQUEST, err))?;
    let mut items = Vec::with_capacity(history.len());
    for message in history {
        items.push(AgentItem::Message(message));
    }

    let mut stream = agent
        .run_stream(AgentRequest {
            input: items,
            context: ChatContext,
        })
        .await
        .map_err(|err| (StatusCode::INTERNAL_SERVER_ERROR, err.to_string()))?;

    let event_stream = stream! {
        let (mut adapter, start_event) = DataStreamProtocolAdapter::new();
        yield Ok::<Event, Infallible>(Event::default().data(start_event.to_json_string()));

        while let Some(event_result) = stream.next().await {
            match event_result {
                Ok(event) => {
                    for payload in adapter.handle_event(&event) {
                        yield Ok::<Event, Infallible>(
                            Event::default().data(payload.to_json_string()),
                        );
                    }
                }
                Err(err) => {
                    // Report the failure to the client, then stop reading.
                    let error_event = adapter.emit_error(&err.to_string());
                    yield Ok::<Event, Infallible>(
                        Event::default().data(error_event.to_json_string()),
                    );
                    break;
                }
            }
        }

        // Close any open parts and emit the finish chunk, even after an error.
        for payload in adapter.finish() {
            yield Ok::<Event, Infallible>(Event::default().data(payload.to_json_string()));
        }

        yield Ok::<Event, Infallible>(Event::default().data("[DONE]"));
    };

    let sse = Sse::new(event_stream)
        .keep_alive(axum::response::sse::KeepAlive::new().interval(Duration::from_secs(15)));
    let mut response = sse.into_response();
    let headers = response.headers_mut();
    headers.insert(
        "x-vercel-ai-ui-message-stream",
        HeaderValue::from_static("v1"),
    );
    headers.insert(
        "cache-control",
        HeaderValue::from_static("no-cache, no-transform"),
    );
    headers.insert("access-control-allow-origin", HeaderValue::from_static("*"));
    headers.insert("connection", HeaderValue::from_static("keep-alive"));

    Ok(response)
}

async fn options_handler() -> impl IntoResponse {
    Response::builder()
        .status(StatusCode::NO_CONTENT)
        .header("access-control-allow-origin", "*")
        .header("access-control-allow-headers", "content-type")
        .header("access-control-allow-methods", "POST, OPTIONS")
        .body(Body::empty())
        .unwrap()
}

async fn not_found() -> impl IntoResponse {
    let body = json!({"error": "Not found"});
    Response::builder()
        .status(StatusCode::NOT_FOUND)
        .header("content-type", "application/json")
        .body(Body::from(body.to_string()))
        .unwrap()
}

// ==== Server bootstrap ====

#[tokio::main]
async fn main() -> Result<(), BoxedError> {
    dotenv().ok();

    let app = Router::new()
        .route("/api/chat", post(chat_handler).options(options_handler))
        .route("/", get(not_found));

    let listener = TcpListener::bind(("0.0.0.0", 8000)).await?;
    println!("AI SDK UI example server listening on http://localhost:8000");
    axum::serve(listener, app.into_make_service()).await?;
    Ok(())
}
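
The least obvious part of the Rust adapter is how `write_for_tool_call_part` handles tool-call deltas: the start chunk is held back until both the call ID and the tool name have arrived, and argument fragments are forwarded only once the call ID is known. The following is a minimal, dependency-free sketch of that deferral logic. `Chunk`, `ToolDelta`, `ToolState`, and `ToolCallTracker` are hypothetical stand-ins written for illustration, not types from llm-sdk or the example adapter, and the step that flushes open text and reasoning parts is left out.

```rust
use std::collections::HashMap;

// Hypothetical stand-in for the two tool-input variants of UIMessageChunk.
#[derive(Debug, PartialEq)]
enum Chunk {
    ToolInputStart { tool_call_id: String, tool_name: String },
    ToolInputDelta { tool_call_id: String, input_text_delta: String },
}

// Hypothetical stand-in for a streamed tool-call delta; any field may be absent.
#[derive(Default)]
struct ToolDelta {
    tool_call_id: Option<String>,
    tool_name: Option<String>,
    args: Option<String>,
}

// Per-index accumulation state, mirroring the fields the adapter tracks.
#[derive(Default)]
struct ToolState {
    tool_call_id: Option<String>,
    tool_name: Option<String>,
    args_buffer: String,
    did_emit_start: bool,
}

#[derive(Default)]
struct ToolCallTracker {
    states: HashMap<usize, ToolState>,
}

impl ToolCallTracker {
    fn push(&mut self, index: usize, delta: &ToolDelta) -> Vec<Chunk> {
        let mut out = Vec::new();
        let state = self.states.entry(index).or_default();

        // Remember whichever identifying fields this delta carries.
        if let Some(id) = &delta.tool_call_id {
            state.tool_call_id = Some(id.clone());
        }
        if let Some(name) = &delta.tool_name {
            state.tool_name = Some(name.clone());
        }

        // Emit the start chunk exactly once, when both ID and name are known.
        if !state.did_emit_start {
            if let (Some(id), Some(name)) = (&state.tool_call_id, &state.tool_name) {
                state.did_emit_start = true;
                out.push(Chunk::ToolInputStart {
                    tool_call_id: id.clone(),
                    tool_name: name.clone(),
                });
            }
        }

        // Always buffer arguments; forward them only when the ID is known.
        if let Some(args) = &delta.args {
            state.args_buffer.push_str(args);
            if let Some(id) = &state.tool_call_id {
                out.push(Chunk::ToolInputDelta {
                    tool_call_id: id.clone(),
                    input_text_delta: args.clone(),
                });
            }
        }

        out
    }
}

fn main() {
    let mut tracker = ToolCallTracker::default();
    let deltas = [
        ToolDelta { tool_call_id: Some("call_1".into()), ..Default::default() },
        ToolDelta {
            tool_name: Some("get_weather".into()),
            args: Some("{\"city\":".into()),
            ..Default::default()
        },
        ToolDelta { args: Some("\"Paris\"}".into()), ..Default::default() },
    ];
    for delta in &deltas {
        println!("{:?}", tracker.push(0, delta));
    }
}
```

In this sketch the first delta produces no chunks because the tool name is still missing. The second produces a start chunk followed by an input delta, and the third produces only an input delta. Argument fragments that arrive before the call ID are buffered but never forwarded, which matches the adapter code above.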