Tracing (OpenTelemetry)

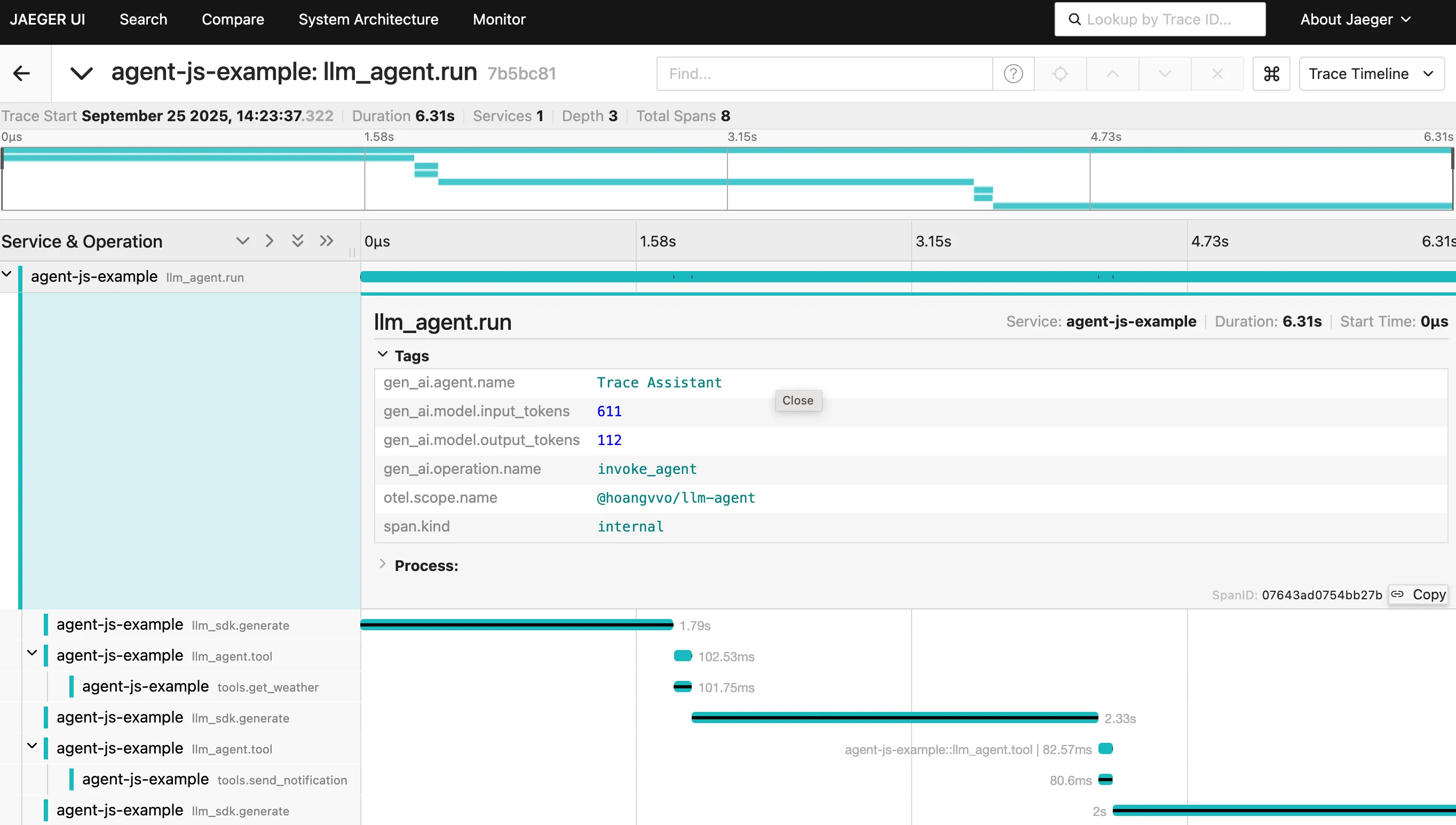

Distributed tracing lets you follow a single request as it fans out across services, spans, and asynchronous work. Each hop in that request emits a span – a timed record that captures what happened, how long it took, and any data you attach. When spans share a trace context, your observability backend can stitch them into a timeline that exposes latency hot spots, errors, and the path taken through your system.

All agent libraries emit OpenTelemetry spans that follow the Semantic Conventions for Generative AI Systems. You get high‑level visibility into the agent run itself, every tool invocation, and each underlying language model call made through the SDK. Because the libraries rely on the standard OpenTelemetry APIs, any additional spans you create inside tool implementations (or in downstream microservices they call) automatically join the same trace, provided the trace context is propagated to them.
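As a rough illustration of how spans from different processes end up in one trace: OpenTelemetry propagators typically carry the context between services in the W3C Trace Context `traceparent` header. The sketch below parses and re-emits that header with plain string handling. `parseTraceparent`, `formatTraceparent`, and `childOf` are hypothetical helper names, not part of the agent libraries or the OpenTelemetry API; in practice a configured propagator does this for you.

```typescript
// Parsed form of a W3C Trace Context `traceparent` header (version 00 layout).
interface TraceParent {
  version: string;
  traceId: string; // 32 lowercase hex chars, shared by every span in the trace
  parentId: string; // 16 lowercase hex chars, the span ID of the caller
  sampled: boolean; // lowest bit of the trace-flags byte
}

const TRACEPARENT_RE =
  /^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/;

function parseTraceparent(header: string): TraceParent | null {
  const match = TRACEPARENT_RE.exec(header.trim());
  if (match === null) return null;
  const version = match[1]!;
  const traceId = match[2]!;
  const parentId = match[3]!;
  const flags = match[4]!;
  // Version "ff" and all-zero IDs are invalid according to the specification.
  if (version === "ff" || /^0+$/.test(traceId) || /^0+$/.test(parentId)) {
    return null;
  }
  return {
    version,
    traceId,
    parentId,
    sampled: (parseInt(flags, 16) & 0x01) === 1,
  };
}

// Simplification: only the sampled bit is preserved when re-encoding flags.
function formatTraceparent(tp: TraceParent): string {
  const flags = tp.sampled ? "01" : "00";
  return `${tp.version}-${tp.traceId}-${tp.parentId}-${flags}`;
}

// A downstream span keeps the trace ID and advertises its own span ID as the
// parent for whatever it calls next. The new span ID is passed in so the
// example stays deterministic.
function childOf(parent: TraceParent, newSpanId: string): TraceParent {
  return { ...parent, parentId: newSpanId };
}
```

Because the trace ID never changes along the call chain, the backend can group spans from the agent, its tools, and any downstream service into one timeline.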

Agent library

Each run produces the spans below. Names and attributes are consistent across TypeScript, Rust, and Go.

| Span name | What it covers | Key attributes |
|---|---|---|
| llm_agent.run | Entire non-streaming run, from invocation to completion | gen_ai.operation.name=invoke_agent, gen_ai.agent.name, aggregated gen_ai.model.* usage tokens, llm_agent.cost |
| llm_agent.run_stream | Entire streaming run (start → final response/error) | Same attributes as llm_agent.run; emitted once the stream finishes or terminates with an error |
| llm_agent.tool | Each tool execute call (success or failure) | gen_ai.operation.name=execute_tool, gen_ai.tool.call.id, gen_ai.tool.name, gen_ai.tool.description, gen_ai.tool.type=function, exception metadata when execute throws |
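The run-level spans report usage aggregated over every model call made during the run. A minimal sketch of that roll-up, assuming each call reports input tokens, output tokens, and a cost. The `CallUsage` shape and the `aggregateUsage` helper are hypothetical and do not reflect the libraries' internal types.

```typescript
// Hypothetical per-call usage record; field names are illustrative only.
interface CallUsage {
  inputTokens: number;
  outputTokens: number;
  cost: number;
}

// Sum the usage of every model call in a run into a single total, which is
// the kind of value a run-level span would carry.
function aggregateUsage(calls: CallUsage[]): CallUsage {
  const total: CallUsage = { inputTokens: 0, outputTokens: 0, cost: 0 };
  for (const call of calls) {
    total.inputTokens += call.inputTokens;
    total.outputTokens += call.outputTokens;
    total.cost += call.cost;
  }
  return total;
}
```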

Tool spans wrap the entire execute handler, so any tracing you add inside runs as a child. If a tool calls out to other services that already emit telemetry, their spans join the same trace because the OpenTelemetry context is active for the duration of the tool call.
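To make the parent/child behavior concrete, here is a toy model of an "active span" stack. It is a sketch for intuition only: `ToyTracer` and `ToySpan` are invented for this example, the model is synchronous, and real OpenTelemetry SDKs track the active context through a context manager rather than a simple array.

```typescript
interface ToySpan {
  name: string;
  spanId: number;
  parentId: number | null;
}

class ToyTracer {
  // Spans in the order they ended (innermost first).
  readonly finished: ToySpan[] = [];
  private readonly active: ToySpan[] = [];
  private nextId = 1;

  // Whatever span is on top of the stack when a new span starts becomes its
  // parent; the new span stays active for the duration of `fn`.
  startActiveSpan<T>(name: string, fn: (span: ToySpan) => T): T {
    const parent = this.active[this.active.length - 1];
    const span: ToySpan = {
      name,
      spanId: this.nextId++,
      parentId: parent ? parent.spanId : null,
    };
    this.active.push(span);
    try {
      return fn(span);
    } finally {
      this.active.pop();
      this.finished.push(span);
    }
  }
}

// The agent run wraps the tool span, which wraps the span created inside the
// tool's execute handler.
const toyTracer = new ToyTracer();
toyTracer.startActiveSpan("llm_agent.run", () => {
  toyTracer.startActiveSpan("llm_agent.tool", () => {
    toyTracer.startActiveSpan("tools.get_weather", () => undefined);
  });
});
```

In this model, `tools.get_weather` records the tool span as its parent without any explicit wiring, which mirrors why spans started inside a tool handler nest under the tool span.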

SDK language model client

The SDK instruments all language model calls. Whenever an agent (or your own application) calls a LanguageModel, you will see:

| Span name | What it covers | Key attributes (subset) |
|---|---|---|
| llm_sdk.generate | Full synchronous generate call (start → finish/error) | gen_ai.operation.name=generate_content, gen_ai.provider.name, gen_ai.request.model, gen_ai.usage.input_tokens, gen_ai.usage.output_tokens, llm_sdk.cost, gen_ai.request.temperature, gen_ai.request.max_tokens, gen_ai.request.top_p, gen_ai.request.top_k, gen_ai.request.presence_penalty, gen_ai.request.frequency_penalty, gen_ai.request.seed |
| llm_sdk.stream | Complete lifetime of stream (initial call + drain) | Same attributes as generate plus gen_ai.server.time_to_first_token and incremental cost/usage tallied from partials |

If an error bubbles up from the provider, the span status is set to ERROR and the exception is recorded, making it easy to spot call failures in tracing UIs.
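The same record-then-rethrow pattern is useful in your own spans. Below is a minimal sketch, assuming a span object with `recordException`, `setStatus`, and `end` methods shaped like those on the `@opentelemetry/api` `Span`. The local `SpanLike` interface and the `runInSpan` helper are hypothetical stand-ins so the example stays dependency-free; they are not the SDK's internal implementation.

```typescript
// Numeric values mirror SpanStatusCode in @opentelemetry/api.
const StatusCode = { UNSET: 0, OK: 1, ERROR: 2 } as const;

// Minimal structural subset of a span used by this sketch.
interface SpanLike {
  recordException(error: Error): void;
  setStatus(status: { code: number; message?: string }): void;
  end(): void;
}

// Run `fn`; on failure, record the exception and mark the span as ERROR
// before rethrowing. The span is always ended, success or failure.
function runInSpan<T>(span: SpanLike, fn: () => T): T {
  try {
    return fn();
  } catch (error) {
    const err = error instanceof Error ? error : new Error(String(error));
    span.recordException(err);
    span.setStatus({ code: StatusCode.ERROR, message: err.message });
    throw error;
  } finally {
    span.end();
  }
}
```

Rethrowing keeps the caller's error handling unchanged while the span still carries the failure details.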

Enabling tracing


The examples below show equivalent agents in TypeScript, Rust, and Go. Each run checks the weather for Seattle, then sends the forecast to a contact, producing tool spans beneath the agent root span.

```typescript
import {
  Agent,
  tool,
  type AgentItem,
  type AgentResponse,
  type AgentToolResult,
} from "@hoangvvo/llm-agent";
import { SpanStatusCode, trace } from "@opentelemetry/api";
import { OTLPTraceExporter } from "@opentelemetry/exporter-trace-otlp-http";
import { resourceFromAttributes } from "@opentelemetry/resources";
import { SimpleSpanProcessor } from "@opentelemetry/sdk-trace-base";
import { NodeTracerProvider } from "@opentelemetry/sdk-trace-node";
import { getModel } from "./get-model.ts";

const provider = new NodeTracerProvider({
  resource: resourceFromAttributes({
    "service.name": "agent-js-tracing-example",
  }),
  spanProcessors: [new SimpleSpanProcessor(new OTLPTraceExporter())],
});
provider.register();

// We'll use this tracer inside tool implementations for nested spans.
const tracer = trace.getTracer("examples/agent-js/tracing");

interface AgentContext {
  customer_name: string;
}

const model = getModel("openai", "gpt-4o-mini");

const getWeatherTool = tool({
  name: "get_weather",
  description: "Get the current weather for a city",
  parameters: {
    type: "object",
    properties: {
      city: { type: "string", description: "City to get the weather for" },
    },
    required: ["city"],
    additionalProperties: false,
  },
  async execute({ city }: { city: string }) {
    return tracer.startActiveSpan(
      "tools.get_weather",
      async (span): Promise<AgentToolResult> => {
        try {
          // Record the city lookup while simulating work.
          span.setAttribute("weather.city", city);
          await new Promise((resolve) => setTimeout(resolve, 100));
          return {
            content: [
              {
                type: "text",
                text: JSON.stringify({
                  city,
                  forecast: "Sunny",
                  temperatureC: 24,
                }),
              },
            ],
            is_error: false,
          };
        } catch (error) {
          span.recordException(error as Error);
          span.setStatus({
            code: SpanStatusCode.ERROR,
            message: String(error),
          });
          throw error;
        } finally {
          span.end();
        }
      },
    );
  },
});

const notifyContactTool = tool({
  name: "send_notification",
  description: "Send a text message to a recipient",
  parameters: {
    type: "object",
    properties: {
      phone_number: { type: "string" },
      message: { type: "string" },
    },
    required: ["phone_number", "message"],
    additionalProperties: false,
  },
  async execute({
    phone_number,
    message,
  }: {
    phone_number: string;
    message: string;
  }) {
    return tracer.startActiveSpan(
      "tools.send_notification",
      async (span): Promise<AgentToolResult> => {
        try {
          // Capture metadata about the outbound notification.
          span.setAttribute("notification.phone", phone_number);
          span.setAttribute("notification.message_length", message.length);
          await new Promise((resolve) => setTimeout(resolve, 80));
          return {
            content: [
              {
                type: "text",
                text: JSON.stringify({ status: "sent", phone_number, message }),
              },
            ],
            is_error: false,
          };
        } catch (error) {
          span.recordException(error as Error);
          span.setStatus({
            code: SpanStatusCode.ERROR,
            message: String(error),
          });
          throw error;
        } finally {
          span.end();
        }
      },
    );
  },
});

const agent = new Agent<AgentContext>({
  name: "Trace Assistant",
  model,
  instructions: [
    // Keep these instructions aligned with the Rust/Go tracing examples.
    "Coordinate weather updates and notifications for clients.",
    "When a request needs both a forecast and a notification, call get_weather before send_notification and summarize the tool results in your reply.",
    ({ customer_name }) =>
      `When asked to contact someone, include a friendly note from ${customer_name}.`,
  ],
  tools: [getWeatherTool, notifyContactTool],
});

// Single-turn request that forces both tools to run.
const items: AgentItem[] = [
  {
    type: "message",
    role: "user",
    content: [
      {
        type: "text",
        text: "Please check the weather for Seattle today and text Mia at +1-555-0100 with the summary.",
      },
    ],
  },
];

const response: AgentResponse = await agent.run({
  context: { customer_name: "Skyline Tours" },
  input: items,
});

console.log(JSON.stringify(response.content, null, 2));

// Export any pending spans before the process exits.
await provider.forceFlush();
await provider.shutdown();
```

```rust
use dotenvy::dotenv;
use futures::future::BoxFuture;
use llm_agent::{Agent, AgentItem, AgentRequest, AgentTool, AgentToolResult, RunState};
use llm_sdk::{
    openai::{OpenAIModel, OpenAIModelOptions},
    JSONSchema, Message, Part,
};
use opentelemetry::{trace::TracerProvider, KeyValue};
use opentelemetry_otlp::{SpanExporter, WithHttpConfig};
use opentelemetry_sdk::{trace::SdkTracerProvider, Resource};
use reqwest::Client;
use schemars::JsonSchema;
use serde::Deserialize;
use serde_json::{json, Value};
use std::{error::Error, sync::Arc, time::Duration};
use tokio::time::sleep;
use tracing::{info, info_span, Instrument, Level};
use tracing_opentelemetry::OpenTelemetryLayer;
use tracing_subscriber::layer::SubscriberExt;

#[derive(Clone)]
struct TracingContext {
    customer_name: String,
}

#[derive(Debug, Deserialize)]
struct WeatherArgs {
    city: String,
}

struct WeatherTool;

impl AgentTool<TracingContext> for WeatherTool {
    fn name(&self) -> String {
        "get_weather".into()
    }

    fn description(&self) -> String {
        "Fetch a short weather summary for a city".into()
    }

    fn parameters(&self) -> JSONSchema {
        json!({
            "type": "object",
            "properties": {
                "city": {
                    "type": "string",
                    "description": "City to fetch the weather for"
                }
            },
            "required": ["city"],
            "additionalProperties": false
        })
    }

    fn execute<'a>(
        &'a self,
        args: Value,
        _context: &'a TracingContext,
        _state: &'a RunState,
    ) -> BoxFuture<'a, Result<AgentToolResult, Box<dyn Error + Send + Sync>>> {
        Box::pin(async move {
            let params: WeatherArgs = serde_json::from_value(args)?;
            // Attach a child span so downstream work is correlated with the agent span.
            // A `span.enter()` guard must not be held across an `.await` (it is not
            // `Send` and would mis-attribute work), so enter the span per operation.
            let span = info_span!("tools.get_weather", city = %params.city);

            // Simulate an internal dependency call while the span is active.
            span.in_scope(|| info!("looking up forecast"));
            sleep(Duration::from_millis(120))
                .instrument(span.clone())
                .await;

            let payload = json!({
                "city": params.city,
                "forecast": "Sunny",
                "temperature_c": 24,
            });

            Ok(AgentToolResult {
                content: vec![Part::text(payload.to_string())],
                is_error: false,
            })
        })
    }
}

#[derive(Debug, Deserialize, JsonSchema)]
#[serde(deny_unknown_fields)]
struct NotifyArgs {
    #[schemars(description = "The phone number to contact")]
    phone_number: String,
    #[schemars(description = "The message content")]
    message: String,
}

struct NotifyTool;

impl AgentTool<TracingContext> for NotifyTool {
    fn name(&self) -> String {
        "send_notification".into()
    }

    fn description(&self) -> String {
        "Send a short notification text message".into()
    }

    fn parameters(&self) -> JSONSchema {
        schemars::schema_for!(NotifyArgs).into()
    }

    fn execute<'a>(
        &'a self,
        args: Value,
        _context: &'a TracingContext,
        _state: &'a RunState,
    ) -> BoxFuture<'a, Result<AgentToolResult, Box<dyn Error + Send + Sync>>> {
        Box::pin(async move {
            let params: NotifyArgs = serde_json::from_value(args)?;
            // `notification.message_length` is declared up front as an empty field;
            // `Span::record` silently ignores fields the span did not declare.
            let span = info_span!(
                "tools.send_notification",
                phone = %params.phone_number,
                notification.message_length = tracing::field::Empty
            );

            // Trace the internal formatting + dispatch work.
            span.in_scope(|| info!("formatting message"));
            sleep(Duration::from_millis(80))
                .instrument(span.clone())
                .await;
            span.record("notification.message_length", params.message.len());
            span.in_scope(|| info!("dispatching message"));

            let payload = json!({
                "status": "sent",
                "phone_number": params.phone_number,
                "message": params.message,
            });

            Ok(AgentToolResult {
                content: vec![Part::text(payload.to_string())],
                is_error: false,
            })
        })
    }
}

fn init_tracing() -> Result<SdkTracerProvider, Box<dyn Error>> {
    let http_client = Client::builder().build()?;

    let exporter = SpanExporter::builder()
        .with_http()
        .with_http_client(http_client)
        .build()
        .expect("Failed to create OTLP exporter");

    let provider = SdkTracerProvider::builder()
        .with_simple_exporter(exporter)
        .with_resource(
            Resource::builder()
                .with_attribute(KeyValue::new("service.name", "agent-rust-tracing-example"))
                .build(),
        )
        .build();

    let tracer = provider.tracer("agent-rust.examples.tracing");

    let subscriber = tracing_subscriber::registry()
        .with(tracing_subscriber::filter::LevelFilter::from_level(
            Level::DEBUG,
        ))
        .with(tracing_subscriber::fmt::layer())
        .with(OpenTelemetryLayer::new(tracer));

    tracing::subscriber::set_global_default(subscriber)?;

    Ok(provider)
}

#[tokio::main]
async fn main() -> Result<(), Box<dyn Error>> {
    dotenv().ok();
    let provider = init_tracing()?;

    let model = Arc::new(OpenAIModel::new(
        "gpt-4o-mini",
        OpenAIModelOptions {
            api_key: std::env::var("OPENAI_API_KEY")?,
            ..Default::default()
        },
    ));

    let agent = Agent::<TracingContext>::builder("Trace Assistant", model)
        // Mirror the guidance used across the JS/Go tracing examples.
        .add_instruction("Coordinate weather updates and notifications for clients.")
        .add_instruction(
            "When a request needs both a forecast and a notification, call get_weather before \
             send_notification and summarize the tool results in your reply.",
        )
        .add_instruction(|ctx: &TracingContext| {
            Ok(format!(
                "When asked to contact someone, include a friendly note from {}.",
                ctx.customer_name
            ))
        })
        .add_tool(WeatherTool)
        .add_tool(NotifyTool)
        .build();

    let context = TracingContext {
        customer_name: "Skyline Tours".into(),
    };

    let query =
        "Please check the weather for Seattle today and text Mia at +1-555-0100 with the summary.";
    let request = AgentRequest {
        context,
        input: vec![AgentItem::Message(Message::user(vec![Part::text(query)]))],
    };

    let response = agent.run(request).await?;
    println!("Agent response: {response:#?}");

    // Ensure all spans are exported before exit.
    provider.force_flush().ok();
    drop(provider);

    Ok(())
}
```

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"log"
	"os"
	"time"

	llmagent "github.com/hoangvvo/llm-sdk/agent-go"
	llmsdk "github.com/hoangvvo/llm-sdk/sdk-go"
	"github.com/hoangvvo/llm-sdk/sdk-go/openai"
	"github.com/joho/godotenv"
	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/attribute"
	"go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracehttp"
	"go.opentelemetry.io/otel/sdk/resource"
	sdktrace "go.opentelemetry.io/otel/sdk/trace"
)

var tracer = otel.Tracer("examples/agent-go/tracing")

func initTracing(ctx context.Context) (*sdktrace.TracerProvider, error) {
	// Configure an OTLP/HTTP exporter; defaults to OTEL_* environment variables when unset.
	exporter, err := otlptracehttp.New(ctx, otlptracehttp.WithInsecure())
	if err != nil {
		return nil, fmt.Errorf("creating OTLP exporter: %w", err)
	}

	tp := sdktrace.NewTracerProvider(
		sdktrace.WithBatcher(exporter),
		sdktrace.WithResource(resource.NewWithAttributes(
			"",
			attribute.String("service.name", "agent-go-tracing-example"),
		)),
	)

	otel.SetTracerProvider(tp)
	return tp, nil
}

type tracingContext struct {
	CustomerName string
}

// Weather tool

type weatherArgs struct {
	City string `json:"city"`
}

type weatherTool struct{}

func (t *weatherTool) Name() string        { return "get_weather" }
func (t *weatherTool) Description() string { return "Get the current weather for a city" }
func (t *weatherTool) Parameters() llmsdk.JSONSchema {
	return llmsdk.JSONSchema{
		"type": "object",
		"properties": map[string]any{
			"city": map[string]any{
				"type":        "string",
				"description": "City to describe",
			},
		},
		"required":             []string{"city"},
		"additionalProperties": false,
	}
}

func (t *weatherTool) Execute(
	ctx context.Context,
	payload json.RawMessage,
	_ tracingContext,
	_ *llmagent.RunState,
) (llmagent.AgentToolResult, error) {
	// Bridge this tool to tracing so the agent span includes internal work.
	_, span := tracer.Start(ctx, "tools.get_weather")
	defer span.End()

	var args weatherArgs
	if err := json.Unmarshal(payload, &args); err != nil {
		return llmagent.AgentToolResult{}, err
	}

	// Annotate the span with the lookup, then simulate work.
	span.SetAttributes(attribute.String("weather.city", args.City))
	time.Sleep(120 * time.Millisecond)

	result := map[string]any{
		"city":          args.City,
		"forecast":      "Sunny",
		"temperature_c": 24,
	}

	encoded, err := json.Marshal(result)
	if err != nil {
		return llmagent.AgentToolResult{}, err
	}

	return llmagent.AgentToolResult{
		Content: []llmsdk.Part{llmsdk.NewTextPart(string(encoded))},
		IsError: false,
	}, nil
}

// Notification tool

type notifyArgs struct {
	PhoneNumber string `json:"phone_number"`
	Message     string `json:"message"`
}

type notifyTool struct{}

func (t *notifyTool) Name() string        { return "send_notification" }
func (t *notifyTool) Description() string { return "Send an SMS notification" }
func (t *notifyTool) Parameters() llmsdk.JSONSchema {
	return llmsdk.JSONSchema{
		"type": "object",
		"properties": map[string]any{
			"phone_number": map[string]any{
				"type":        "string",
				"description": "Recipient phone number",
			},
			"message": map[string]any{
				"type":        "string",
				"description": "Message to send",
			},
		},
		"required":             []string{"phone_number", "message"},
		"additionalProperties": false,
	}
}

func (t *notifyTool) Execute(
	ctx context.Context,
	payload json.RawMessage,
	_ tracingContext,
	_ *llmagent.RunState,
) (llmagent.AgentToolResult, error) {
	_, span := tracer.Start(ctx, "tools.send_notification")
	defer span.End()

	var args notifyArgs
	if err := json.Unmarshal(payload, &args); err != nil {
		return llmagent.AgentToolResult{}, err
	}

	// Annotate the span with useful metadata about the notification work.
	span.SetAttributes(attribute.String("notification.phone", args.PhoneNumber))
	span.SetAttributes(attribute.Int("notification.message_length", len(args.Message)))
	time.Sleep(80 * time.Millisecond)

	encoded, err := json.Marshal(map[string]any{
		"status":       "sent",
		"phone_number": args.PhoneNumber,
		"message":      args.Message,
	})
	if err != nil {
		return llmagent.AgentToolResult{}, err
	}

	return llmagent.AgentToolResult{
		Content: []llmsdk.Part{llmsdk.NewTextPart(string(encoded))},
		IsError: false,
	}, nil
}

func main() {
	ctx := context.Background()
	godotenv.Load("../.env")

	tp, err := initTracing(ctx)
	if err != nil {
		log.Fatalf("failed to init tracing: %v", err)
	}
	// Shutdown flushes the batch processor so buffered spans are exported.
	defer func() { _ = tp.Shutdown(ctx) }()

	apiKey := os.Getenv("OPENAI_API_KEY")
	if apiKey == "" {
		log.Fatal("OPENAI_API_KEY environment variable must be set")
	}

	model := openai.NewOpenAIModel("gpt-4o-mini", openai.OpenAIModelOptions{APIKey: apiKey})

	agent := llmagent.NewAgent("Trace Assistant", model,
		llmagent.WithInstructions(
			// Keep these aligned with the other language examples.
			llmagent.InstructionParam[tracingContext]{String: ptr("Coordinate weather updates and notifications for clients.")},
			llmagent.InstructionParam[tracingContext]{String: ptr("When a request needs both a forecast and a notification, call get_weather before send_notification and summarize the tool results in your reply.")},
			llmagent.InstructionParam[tracingContext]{Func: func(_ context.Context, c tracingContext) (string, error) {
				return fmt.Sprintf("When asked to contact someone, include a friendly note from %s.", c.CustomerName), nil
			}},
		),
		llmagent.WithTools(&weatherTool{}, &notifyTool{}),
	)

	// Run a single turn that forces both tools to execute.
	req := llmagent.AgentRequest[tracingContext]{
		Context: tracingContext{CustomerName: "Skyline Tours"},
		Input: []llmagent.AgentItem{
			llmagent.NewAgentItemMessage(llmsdk.NewUserMessage(
				llmsdk.NewTextPart("Please check the weather for Seattle today and text Mia at +1-555-0100 with the summary."),
			)),
		},
	}

	// This single call emits agent + tool spans under the configured exporter.
	resp, err := agent.Run(ctx, req)
	if err != nil {
		log.Fatalf("agent run failed: %v", err)
	}

	fmt.Printf("Response: %+v\n", resp.Content)
}

func ptr[T any](v T) *T { return &v }
```